
QuinnTests

Mentored by: Quinn

Comprehensive testing framework for software quality assurance

Python

pytest

unittest

Coverage.py

CI/CD

Docker

Description

An advanced testing framework designed to ensure software quality and reliability. Provides automated test execution, coverage analysis, and continuous integration support. Includes unit testing, integration testing, and performance benchmarking capabilities with detailed reporting and analytics.

Team Members

Cohort: Data Science Bootcamp 2025 (Data)


Responsibilities:

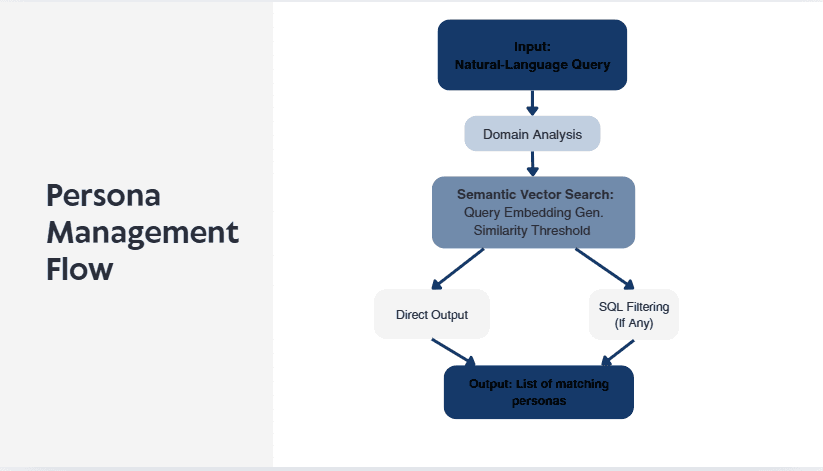

An MCP-based automated testing system for Quinn, a fintech company that developed an AI-powered financial advisor. My part in the project included developing the full Persona Management subsystem, including building the agent and the MCP tools for query analysis and microservice orchestration; implementing an internal RAG workflow (OpenAI embeddings) and integrating Redis-based vector search; and working with Docker Compose, FastAPI, and Redis, and writing the CI/CD pipelines.
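The retrieval step of a RAG workflow ranks stored embeddings by their similarity to a query embedding. Below is a minimal in-memory sketch of that ranking, assuming cosine similarity; the toy three-dimensional vectors and persona keys are hypothetical, and in the described system Redis vector search would run the equivalent nearest-neighbor query server-side.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: List[float], index: Dict[str, List[float]], k: int = 3) -> List[Tuple[str, float]]:
    """Return the k stored items most similar to the query, best first."""
    scored = [(key, cosine_similarity(query, vec)) for key, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy index; real vectors would come from an OpenAI embedding model.
index = {
    "conservative_retiree": [0.9, 0.1, 0.0],
    "aggressive_trader": [0.0, 0.2, 0.9],
    "young_saver": [0.7, 0.6, 0.1],
}
print(top_k([1.0, 0.2, 0.0], index, k=2))
```

A linear scan like this is fine for a handful of vectors; a vector index exists precisely to avoid scanning every stored embedding at query time.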

Designing and implementing a PostgreSQL database using pgvector and JSONB.

Responsibilities:

Designed and maintained a PostgreSQL database, including schema migrations using Alembic.

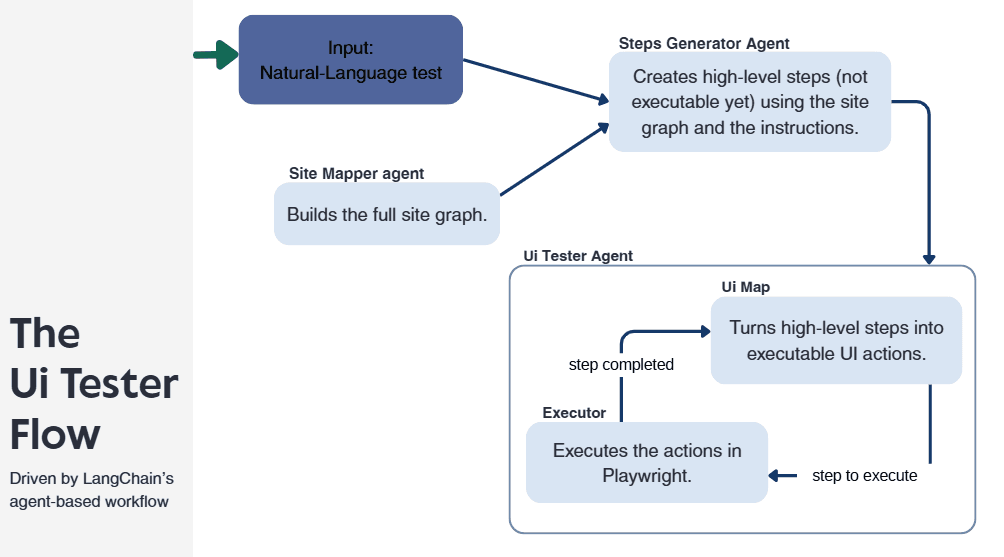

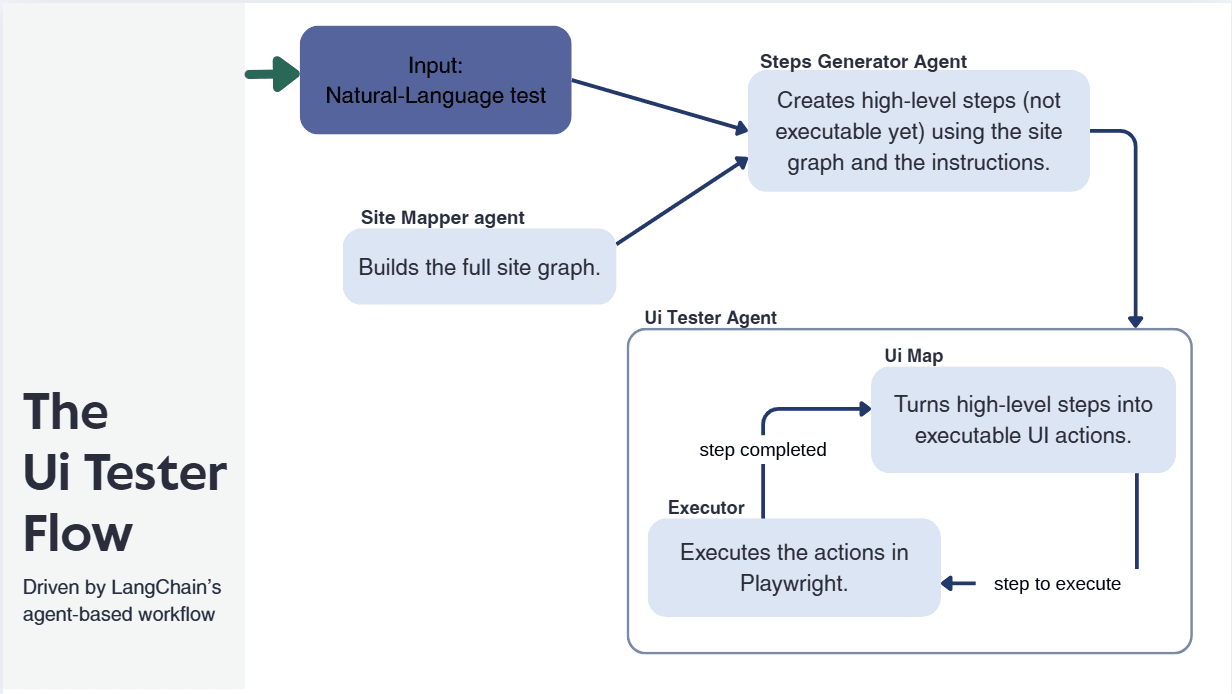

Developed the Quinn UI Tester microservice using Python, FastAPI, and Playwright MCP, enabling automated, browser-based UI tests driven by natural-language scenarios and producing reproducible, artifact-rich results (screenshots, DOM snapshots, logs) within the Quinn MCP Testing platform.

Built agent-based orchestration flows using LangChain and LangFlow.

Integrated LLMs using prompt-engineering techniques to convert natural-language test descriptions into structured steps, executed by a Playwright MCP microservice.
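Converting natural-language test descriptions into structured steps usually means prompting the LLM to answer in JSON and then validating that reply before anything reaches the browser. A minimal sketch, assuming a hypothetical step vocabulary (`navigate`, `click`, `fill`, `assert_text`) and a hypothetical `UiStep` shape; the platform's actual step schema is not described here.

```python
import json
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical action vocabulary; the real step schema is not documented here.
ALLOWED_ACTIONS = {"navigate", "click", "fill", "assert_text"}


@dataclass
class UiStep:
    action: str
    target: str
    value: Optional[str] = None


def parse_steps(llm_output: str) -> List[UiStep]:
    """Validate the LLM's JSON reply and convert it into typed steps."""
    data = json.loads(llm_output)  # raises json.JSONDecodeError on malformed output
    if not isinstance(data, list):
        raise ValueError("expected a JSON array of steps")
    steps = []
    for i, raw in enumerate(data):
        if not isinstance(raw, dict):
            raise ValueError(f"step {i}: expected an object")
        action = raw.get("action")
        if action not in ALLOWED_ACTIONS:
            raise ValueError(f"step {i}: unsupported action {action!r}")
        if not raw.get("target"):
            raise ValueError(f"step {i}: missing target")
        steps.append(UiStep(action=action, target=raw["target"], value=raw.get("value")))
    return steps


# What a prompted LLM might return for "Log in as demo and check the dashboard title".
reply = """[
  {"action": "navigate", "target": "https://example.com/login"},
  {"action": "fill", "target": "#username", "value": "demo"},
  {"action": "click", "target": "button[type=submit]"},
  {"action": "assert_text", "target": "h1", "value": "Dashboard"}
]"""
for step in parse_steps(reply):
    print(step)
```

Rejecting unknown actions early keeps a hallucinated step from turning into an opaque browser failure later in the run.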

Implemented asynchronous task coordination between microservices.

Worked in an Agile team using Docker and Git for collaboration.

...and more contributions not listed here

Responsibilities:

Created the project dashboard in React.

Wrote CI tests that validate the code running in Docker and the MCP server before a branch is merged.

Wrote automated UI tests with Playwright.

Responsibilities:

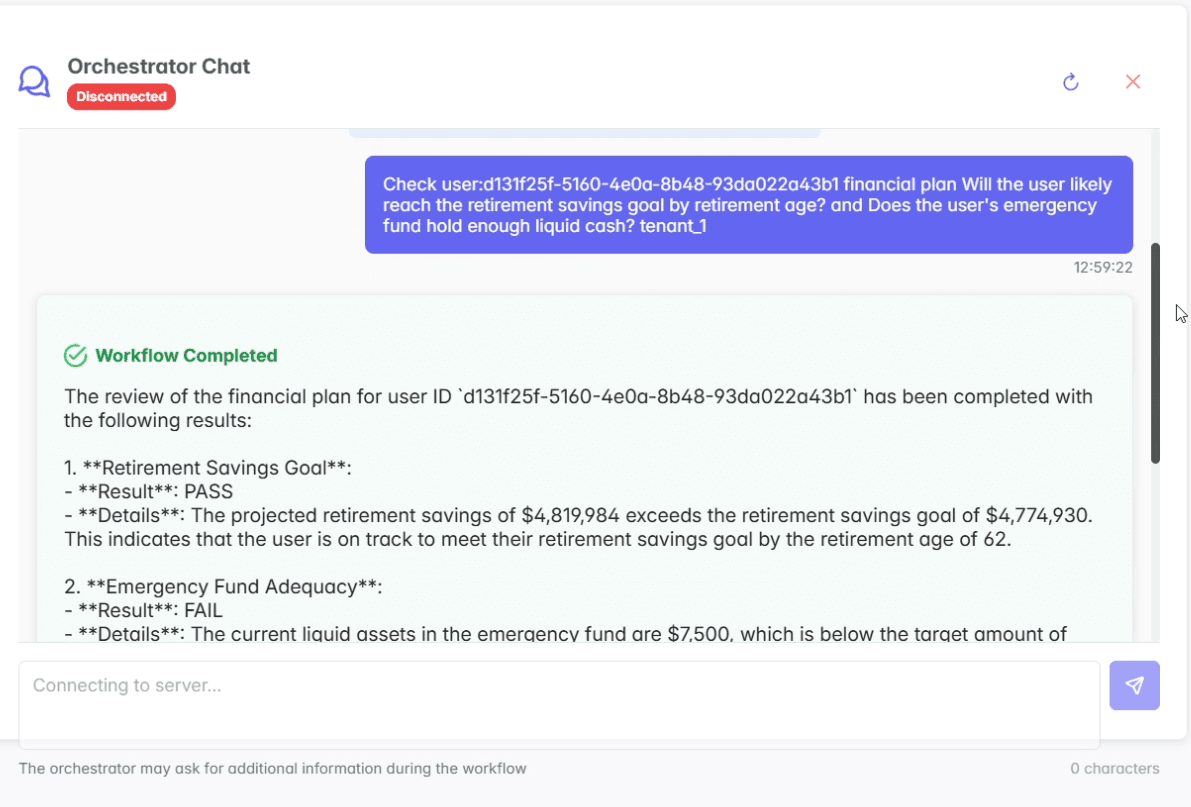

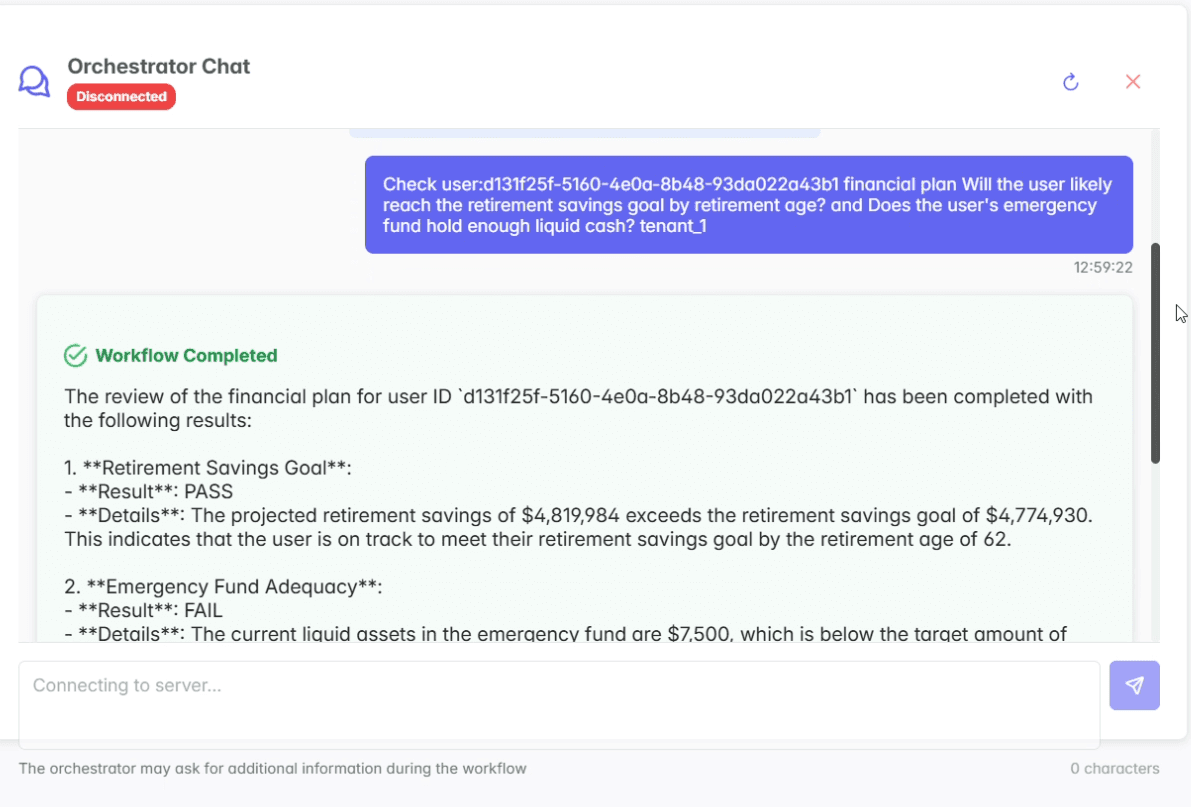

Built the initial MCP-based orchestrator using Python, FastMCP, asyncio, establishing the system’s multi-service execution architecture.

Developed a fully generic Plan Generator using Pydantic, JSON Schemas, async Python, and structured validation logic.

Built the schema-driven Plan Reviewer using Pydantic models, structured evaluators, JSON-based rule definitions, and clean async flows.

Implemented the LLM Factory using the factory pattern, environment-driven configuration, and the Gemini and OpenAI APIs, enabling effortless model switching.
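A factory like this typically maps a provider name, taken from an argument or an environment variable, to a constructor that returns objects sharing one interface. A minimal sketch under that assumption: `GeminiModel`, `OpenAIModel`, `create_llm`, and the `LLM_PROVIDER`/`LLM_MODEL` variables are hypothetical names, and the stub classes only echo the prompt instead of calling the real SDKs.

```python
import os
from typing import Callable, Dict, Optional, Protocol


class ChatModel(Protocol):
    def complete(self, prompt: str) -> str: ...


# Hypothetical stand-ins: real versions would wrap the Gemini and OpenAI SDK clients.
class GeminiModel:
    def __init__(self, model_name: str) -> None:
        self.model_name = model_name

    def complete(self, prompt: str) -> str:
        return f"[gemini:{self.model_name}] {prompt}"


class OpenAIModel:
    def __init__(self, model_name: str) -> None:
        self.model_name = model_name

    def complete(self, prompt: str) -> str:
        return f"[openai:{self.model_name}] {prompt}"


# Registry mapping provider names to constructors; adding a provider is one entry.
_PROVIDERS: Dict[str, Callable[[str], ChatModel]] = {
    "gemini": GeminiModel,
    "openai": OpenAIModel,
}


def create_llm(provider: Optional[str] = None, model_name: Optional[str] = None) -> ChatModel:
    """Build a model from explicit arguments or, failing that, environment variables."""
    provider = (provider or os.environ.get("LLM_PROVIDER", "openai")).lower()
    model_name = model_name or os.environ.get("LLM_MODEL", "default")
    try:
        factory = _PROVIDERS[provider]
    except KeyError:
        raise ValueError(f"unknown LLM provider: {provider!r}") from None
    return factory(model_name)


llm = create_llm("gemini", "example-model")  # hypothetical model name
print(llm.complete("Summarize this test run"))
```

Because callers depend only on `complete`, switching providers becomes a configuration change rather than a code change.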

Upgraded the orchestrator into a LangChain Agent using StructuredTools, reasoning loops, LangChain callbacks, and efficient memory handling.

Created the MCP Tools Registry with dynamic Pydantic model generation, JSON schema parsing, runtime tool injection, and LangChain integration.
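Dynamic model generation here means turning each tool's JSON Schema into a class at runtime, so arguments can be checked before the tool is called. The entry names Pydantic; to keep the sketch dependency-free it swaps in the standard library's `dataclasses.make_dataclass`, which checks for missing and unknown arguments but, unlike Pydantic, does not validate types. `ToolRegistry`, `model_from_schema`, and the example tool are hypothetical.

```python
from dataclasses import field, make_dataclass
from typing import Any, Dict, Optional

# JSON Schema primitive types mapped to Python annotations.
_JSON_TO_PY = {"string": str, "integer": int, "number": float,
               "boolean": bool, "array": list, "object": dict}


def model_from_schema(name: str, schema: Dict[str, Any]) -> type:
    """Build an argument class from a tool's JSON Schema (stdlib stand-in for pydantic.create_model)."""
    props = schema.get("properties", {})
    required = set(schema.get("required", []))
    required_fields, optional_fields = [], []
    for prop, spec in props.items():
        py_type = _JSON_TO_PY.get(spec.get("type"), Any)
        if prop in required:
            required_fields.append((prop, py_type))
        else:
            optional_fields.append((prop, Optional[py_type], field(default=None)))
    # Dataclasses require fields without defaults to precede fields with defaults.
    return make_dataclass(name, required_fields + optional_fields)


class ToolRegistry:
    """Keeps tool names, descriptions, and generated argument classes together."""

    def __init__(self) -> None:
        self._tools: Dict[str, Dict[str, Any]] = {}

    def register(self, name: str, description: str, schema: Dict[str, Any]) -> None:
        args_cls = model_from_schema(f"{name.title().replace('_', '')}Args", schema)
        self._tools[name] = {"description": description, "args": args_cls}

    def build_args(self, name: str, **kwargs: Any) -> Any:
        # Instantiation raises TypeError on unknown or missing required arguments.
        return self._tools[name]["args"](**kwargs)


registry = ToolRegistry()
registry.register(
    "search_personas",  # hypothetical tool
    "Semantic persona search",
    {"type": "object",
     "properties": {"query": {"type": "string"}, "limit": {"type": "integer"}},
     "required": ["query"]},
)
print(registry.build_args("search_personas", query="retired teacher"))
```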

Implemented the Ask-User mechanism using WebSocket, asyncio, bidirectional messaging, enabling real-time clarification mid-execution.
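One common way to pause execution mid-run and wait for the user is to park an `asyncio` Future under a question ID and resolve it when the matching answer arrives on the socket. A minimal sketch, assuming that design: the message fields (`type`, `id`, `question`, `answer`) and the `AskUserBroker` class are hypothetical, and an `asyncio.Queue` plays the part of the WebSocket.

```python
import asyncio
import itertools
from typing import Awaitable, Callable, Dict


class AskUserBroker:
    """Pauses a task until the user answers a clarification question.

    `send` is a hypothetical callback that would push the question over the WebSocket.
    """

    def __init__(self, send: Callable[[dict], Awaitable[None]]) -> None:
        self._send = send
        self._pending: Dict[int, asyncio.Future] = {}
        self._ids = itertools.count(1)

    async def ask(self, question: str, timeout: float = 60.0) -> str:
        qid = next(self._ids)
        future = asyncio.get_running_loop().create_future()
        self._pending[qid] = future
        await self._send({"type": "ask_user", "id": qid, "question": question})
        try:
            # The calling task is suspended here; other tasks keep running.
            return await asyncio.wait_for(future, timeout)
        finally:
            self._pending.pop(qid, None)

    def on_user_message(self, message: dict) -> None:
        """Called by the WebSocket receive loop for each incoming message."""
        future = self._pending.get(message.get("id"))
        if future is not None and not future.done():
            future.set_result(message["answer"])


async def demo() -> str:
    outbox: asyncio.Queue = asyncio.Queue()
    broker = AskUserBroker(send=outbox.put)

    async def fake_user() -> None:
        # Simulates the browser client answering over the socket.
        question = await outbox.get()
        broker.on_user_message({"id": question["id"], "answer": "EUR"})

    user_task = asyncio.create_task(fake_user())
    answer = await broker.ask("Which currency should the test use?")
    await user_task
    return answer


print(asyncio.run(demo()))
```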

Integrated RabbitMQ RPC using pika, message queues, correlation IDs, distributed workers, ensuring scalable and fault-tolerant execution.
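In the RabbitMQ RPC pattern, the client tags each request with a correlation ID and a reply queue, and matches replies back to waiting callers by that ID; with pika these travel as the `correlation_id` and `reply_to` message properties. The sketch below reproduces only the matching logic in-process, assuming `asyncio.Queue` objects as stand-ins for the broker queues and a hypothetical `run_test` method, so it runs without a RabbitMQ server.

```python
import asyncio
import json
import uuid
from typing import Dict


class InProcessRpcClient:
    """Request/reply over queues, matching replies to requests by correlation ID.

    The asyncio queues stand in for a RabbitMQ request queue and a reply queue.
    """

    def __init__(self, request_queue: asyncio.Queue, reply_queue: asyncio.Queue) -> None:
        self.request_queue = request_queue
        self.reply_queue = reply_queue
        self.pending: Dict[str, asyncio.Future] = {}

    async def call(self, method: str, params: dict) -> dict:
        correlation_id = str(uuid.uuid4())
        future = asyncio.get_running_loop().create_future()
        self.pending[correlation_id] = future
        body = json.dumps({"method": method, "params": params})
        await self.request_queue.put({"correlation_id": correlation_id, "body": body})
        return await future

    async def consume_replies(self) -> None:
        while True:
            message = await self.reply_queue.get()
            future = self.pending.pop(message["correlation_id"], None)
            if future is not None:  # ignore replies nobody is waiting for
                future.set_result(json.loads(message["body"]))


async def worker(name: str, requests: asyncio.Queue, replies: asyncio.Queue) -> None:
    """A competing consumer: several workers drain the same request queue."""
    while True:
        message = await requests.get()
        request = json.loads(message["body"])
        result = {"worker": name, "echo": request["params"]}
        await replies.put({"correlation_id": message["correlation_id"], "body": json.dumps(result)})


async def demo() -> list:
    requests, replies = asyncio.Queue(), asyncio.Queue()
    client = InProcessRpcClient(requests, replies)
    background = [asyncio.create_task(client.consume_replies())]
    background += [asyncio.create_task(worker(f"w{i}", requests, replies)) for i in range(3)]
    results = await asyncio.gather(*(client.call("run_test", {"case": n}) for n in range(5)))
    for task in background:
        task.cancel()
    return results


for r in asyncio.run(demo()):
    print(r)
```

Because replies are routed by ID rather than by arrival order, any number of workers can process requests concurrently and the caller still receives the right answer.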

Built the Orchestrator–Workers Bridge using RabbitMQ envelopes, JSON-RPC patterns, MCP-style schemas, and unified messaging logic.

Added Redis caching and semantic caching using redis-py, embeddings, and hashed contexts, reducing LLM calls and improving runtime performance.
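A two-level LLM cache usually tries an exact match on a hash of the normalized prompt and its context first, then falls back to an embedding-similarity lookup. A minimal sketch of that idea, assuming an in-memory dict and list in place of Redis keys and a Redis vector index, a hypothetical `llm:` key prefix, and a similarity threshold chosen only for illustration; `toy_embed` is a hypothetical letter-count embedding used purely to make the example runnable.

```python
import hashlib
import math
from typing import Callable, List, Optional, Tuple

Vector = List[float]


def _cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class TwoLevelLlmCache:
    """Exact lookup by hashed context, then a semantic (embedding) fallback.

    A dict and a list stand in for Redis keys and a Redis vector index.
    """

    def __init__(self, embed: Callable[[str], Vector], threshold: float = 0.92) -> None:
        self.embed = embed
        self.threshold = threshold
        self.exact: dict = {}
        self.semantic: List[Tuple[str, Vector, str]] = []  # (context, vector, answer)

    @staticmethod
    def key(prompt: str, context: str) -> str:
        normalized = " ".join(prompt.lower().split()) + "\x00" + context
        return "llm:" + hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt: str, context: str = "") -> Optional[str]:
        hit = self.exact.get(self.key(prompt, context))
        if hit is not None:
            return hit
        query = self.embed(prompt)
        best_score, best_answer = 0.0, None
        for ctx, vec, answer in self.semantic:
            if ctx != context:  # never reuse an answer across different contexts
                continue
            score = _cosine(query, vec)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def put(self, prompt: str, answer: str, context: str = "") -> None:
        self.exact[self.key(prompt, context)] = answer
        self.semantic.append((context, self.embed(prompt), answer))


def toy_embed(text: str) -> Vector:
    """Hypothetical embedding: letter counts. A real system would call an embedding model."""
    return [float(text.lower().count(ch)) for ch in "abcdefghijklmnopqrstuvwxyz"]


cache = TwoLevelLlmCache(embed=toy_embed, threshold=0.95)
cache.put("What is my risk profile?", "moderate", context="user-42")
print(cache.get("what is my risk profile", context="user-42"))
```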

...and more contributions not listed here

Responsibilities:

Built a tenant-management service that provides tenant data and prevents unauthorized access, exposed through a CLI tool and a FastAPI server.

Added Redis caching to tenant management for faster access: decorators first check the in-memory cache and only fall back to the more expensive on-disk database query on a miss.

Created a monthly scheduler that cleans up the archive in the database; APScheduler schedules the cleanup jobs into message queues built on RQ and Redis.
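A monthly job boils down to a cron-style rule such as "day 1 of every month at a fixed hour"; in APScheduler that corresponds to a cron trigger with `day=1`, and each firing would enqueue the cleanup onto an RQ queue. The sketch below only computes the next run time under that rule, assuming a hypothetical 03:00 start; it does not reproduce the project's actual schedule or cleanup query.

```python
from datetime import datetime


def next_monthly_run(now: datetime, day: int = 1, hour: int = 3) -> datetime:
    """Next occurrence of `day` at `hour`:00 (assumes day <= 28 so every month has it)."""
    candidate = now.replace(day=day, hour=hour, minute=0, second=0, microsecond=0)
    if candidate > now:
        return candidate  # this month's run is still ahead
    # Otherwise roll over to the same day next month, handling December.
    year, month = (now.year + 1, 1) if now.month == 12 else (now.year, now.month + 1)
    return candidate.replace(year=year, month=month)


print(next_monthly_run(datetime(2025, 1, 15, 10, 0)))  # 2025-02-01 03:00:00
```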

I designed a generic tag system that uses many-to-many relationships for cross-tagging and efficient execution of scenarios.
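A many-to-many tag system is usually three tables: the tagged entities, the tags, and a join table holding one row per (entity, tag) pair. A minimal sketch using SQLite from the standard library, assuming hypothetical table names and sample data, with a query that selects scenarios carrying every requested tag; the same SQL shape works in PostgreSQL.

```python
import sqlite3
from typing import List

SCHEMA = """
CREATE TABLE scenarios (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE tags (id INTEGER PRIMARY KEY, name TEXT UNIQUE NOT NULL);
CREATE TABLE scenario_tags (
    scenario_id INTEGER REFERENCES scenarios(id),
    tag_id INTEGER REFERENCES tags(id),
    PRIMARY KEY (scenario_id, tag_id)
);
"""


def scenarios_with_all_tags(conn: sqlite3.Connection, tags: List[str]) -> List[str]:
    """Return names of scenarios carrying every tag in `tags` (AND semantics)."""
    if not tags:
        raise ValueError("at least one tag is required")
    placeholders = ",".join("?" for _ in tags)
    rows = conn.execute(
        f"""
        SELECT s.name
        FROM scenarios s
        JOIN scenario_tags st ON st.scenario_id = s.id
        JOIN tags t ON t.id = st.tag_id
        WHERE t.name IN ({placeholders})
        GROUP BY s.id
        HAVING COUNT(DISTINCT t.id) = ?
        ORDER BY s.name
        """,
        (*tags, len(tags)),
    ).fetchall()
    return [name for (name,) in rows]


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO scenarios (id, name) VALUES (?, ?)",
                 [(1, "login_flow"), (2, "checkout_flow"), (3, "profile_edit")])
conn.executemany("INSERT INTO tags (id, name) VALUES (?, ?)",
                 [(1, "ui"), (2, "smoke"), (3, "payments")])
conn.executemany("INSERT INTO scenario_tags VALUES (?, ?)",
                 [(1, 1), (1, 2), (2, 1), (2, 3), (3, 1)])
print(scenarios_with_all_tags(conn, ["ui", "smoke"]))  # ['login_flow']
```

The `HAVING COUNT(DISTINCT ...)` clause is what turns "has any of these tags" into "has all of these tags".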

Created a FastAPI server that exposes the tagging system to every service in the system, providing easy tag-based filtering and retrieval while restricting access to authorized callers only.

Established full CI/CD pipelines via GitHub Actions for automated validation, with two checks written in pytest: (1) validating the syntax of .json files and checking that pyproject.toml files load correctly; (2) verifying that all MCP servers communicate correctly and return their tool lists through LangChain, comparing the output with the expected output using Google Gemini.
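The first check can be expressed as a small helper plus a pytest test that fails with a readable list of broken files. A minimal sketch covering only the JSON part, assuming the test runs from the repository root; `find_invalid_json` is a hypothetical helper name, and the pyproject.toml and MCP-server checks are not reproduced.

```python
import json
from pathlib import Path
from typing import List


def find_invalid_json(root: Path) -> List[str]:
    """Return 'path: error' strings for every *.json file under root that fails to parse."""
    errors = []
    for path in sorted(root.rglob("*.json")):
        try:
            json.loads(path.read_text(encoding="utf-8"))
        except (json.JSONDecodeError, UnicodeDecodeError) as exc:
            errors.append(f"{path}: {exc}")
    return errors


def test_all_json_files_parse() -> None:
    # Collected by pytest; assumes the working directory is the repository root.
    errors = find_invalid_json(Path("."))
    assert not errors, "\n".join(errors)
```

Collecting all errors before asserting means a single CI run reports every broken file instead of stopping at the first.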

I built a video-processing component in Python that uses the MediaPipe Face Mesh model to detect facial landmarks and extract precise eye and head positions from each frame. I implemented a signal-smoothing mechanism using a five-frame moving window to stabilize rapid fluctuations in the raw landmark data and ensure clean, reliable signals before further analysis.
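A five-frame moving window can be kept in a `collections.deque` with `maxlen=5`, which drops the oldest frame automatically. A minimal sketch for one 2D landmark coordinate, assuming a simple unweighted mean; the sample points are illustrative values, not project data.

```python
from collections import deque
from typing import Deque, Tuple

Point = Tuple[float, float]


class MovingAverage2D:
    """Smooths a stream of (x, y) landmark positions over the last `window` frames."""

    def __init__(self, window: int = 5) -> None:
        self.buffer: Deque[Point] = deque(maxlen=window)

    def update(self, point: Point) -> Point:
        self.buffer.append(point)  # the oldest frame drops out automatically
        n = len(self.buffer)
        return (sum(p[0] for p in self.buffer) / n,
                sum(p[1] for p in self.buffer) / n)


smoother = MovingAverage2D(window=5)
# The 0.90 spike in the third frame is damped instead of passed straight through.
for raw in [(0.50, 0.40), (0.52, 0.41), (0.90, 0.40), (0.51, 0.39), (0.49, 0.40)]:
    print(smoother.update(raw))
```

The trade-off is latency: a wider window gives steadier signals but reacts more slowly to genuine head or eye movement.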

I added a head-pose estimation module that computes a rotation matrix from MediaPipe's 3D facial landmarks. Using OpenCV's Perspective-n-Point (PnP) solver, I obtained the rotation matrix R and extracted the pitch and yaw angles for real-time analysis of gaze direction. This stage established the foundation for future expansion toward full 3D orientation tracking.
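OpenCV's `cv2.solvePnP` returns a rotation vector, and `cv2.Rodrigues` converts it into the 3×3 matrix R; the angles then follow from R's entries. The formulas depend on the decomposition order, so this sketch assumes $R = R_z(\text{roll})\,R_y(\text{yaw})\,R_x(\text{pitch})$, which gives

$$\text{pitch} = \operatorname{atan2}(R_{21}, R_{22}),\quad \text{yaw} = \operatorname{atan2}\!\left(-R_{20}, \sqrt{R_{21}^2 + R_{22}^2}\right),\quad \text{roll} = \operatorname{atan2}(R_{10}, R_{00}).$$

The camera-axis convention (and therefore the sign of each angle) is an assumption; pure Python lists stand in for the NumPy array OpenCV would return.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def euler_from_rotation(R: Matrix) -> Tuple[float, float, float]:
    """Pitch (x), yaw (y), roll (z) in degrees, assuming R = Rz(roll) @ Ry(yaw) @ Rx(pitch)."""
    pitch = math.atan2(R[2][1], R[2][2])
    yaw = math.atan2(-R[2][0], math.hypot(R[2][1], R[2][2]))
    roll = math.atan2(R[1][0], R[0][0])
    return math.degrees(pitch), math.degrees(yaw), math.degrees(roll)


def rotation_from_euler(pitch: float, yaw: float, roll: float) -> Matrix:
    """Inverse helper for checking: builds Rz(roll) @ Ry(yaw) @ Rx(pitch) from degrees."""
    a, b, c = (math.radians(v) for v in (pitch, yaw, roll))
    ca, sa = math.cos(a), math.sin(a)
    cb, sb = math.cos(b), math.sin(b)
    cc, sc = math.cos(c), math.sin(c)
    return [
        [cc * cb, cc * sb * sa - sc * ca, cc * sb * ca + sc * sa],
        [sc * cb, sc * sb * sa + cc * ca, sc * sb * ca - cc * sa],
        [-sb, cb * sa, cb * ca],
    ]


# Round trip: angles -> matrix -> angles (valid while |yaw| < 90 degrees).
print(euler_from_rotation(rotation_from_euler(10.0, -25.0, 5.0)))
```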

I developed an attention-classification mechanism based on head angles and gaze signals, where I defined dynamic thresholds distinguishing between three attention levels: Focused, Normal, and Distracted. The classifier uses the Gaze Aversion Rate (GA Rate), the Eye-Aspect Ratio (EAR) for blink detection, and off-screen time tracking to produce an overall attention summary at the end of video processing.
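The Eye Aspect Ratio for six eye landmarks $p_1,\dots,p_6$ is $\text{EAR} = \dfrac{\lVert p_2 - p_6\rVert + \lVert p_3 - p_5\rVert}{2\,\lVert p_1 - p_4\rVert}$, which drops sharply when the eye closes. The sketch below combines it with a gaze-aversion rate into the three labels named above. Every threshold in it (EAR 0.21, two-frame minimum blink, ±25° yaw, ±20° pitch, 10% and 30% aversion cut-offs) is a hypothetical fixed value, whereas the project describes dynamic thresholds.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks p1..p6: (|p2-p6| + |p3-p5|) / (2 * |p1-p4|)."""
    p1, p2, p3, p4, p5, p6 = eye
    return (math.dist(p2, p6) + math.dist(p3, p5)) / (2.0 * math.dist(p1, p4))


def count_blinks(ears: List[float], threshold: float = 0.21, min_frames: int = 2) -> int:
    """A blink is a run of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ears:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the last frame
        blinks += 1
    return blinks


# Hypothetical limits; the project's actual (dynamic) thresholds are not given.
YAW_LIMIT_DEG = 25.0
PITCH_LIMIT_DEG = 20.0


def summarize_attention(frames: List[Tuple[float, float]], fps: float) -> dict:
    """frames: per-frame (pitch, yaw) in degrees. Returns GA rate, off-screen seconds, label."""
    averted = [abs(y) > YAW_LIMIT_DEG or abs(p) > PITCH_LIMIT_DEG for p, y in frames]
    ga_rate = sum(averted) / len(frames)
    off_screen_s = sum(averted) / fps
    if ga_rate < 0.10:
        label = "Focused"
    elif ga_rate < 0.30:
        label = "Normal"
    else:
        label = "Distracted"
    return {"ga_rate": ga_rate, "off_screen_s": off_screen_s, "label": label}


open_eye = [(0, 0), (1, 1), (3, 1), (4, 0), (3, -1), (1, -1)]
print(eye_aspect_ratio(open_eye))
print(summarize_attention([(0.0, 0.0)] * 8 + [(0.0, 40.0)] * 2, fps=10.0))
```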

I created an automated results-reporting layer in Python that stores per-frame states, aggregate statistics, and temporal patterns of attention. I integrated visualization capabilities using Matplotlib to generate graphs showing changes in attention over time, and designed the structure so it can later connect to an external dashboard or a CI/CD pipeline.

...and more contributions not listed here

Responsibilities:

Designed and maintained a PostgreSQL database, including schema migrations using Alembic.

Built a schema-driven Plan Reviewer that evaluates plans against user-defined criteria and produces clear, consistent structured output.

Built a generic Plan Generator using Pydantic + JSON Schemas for structured, reusable, tenant-flexible plan creation.

Developed the Quinn UI Tester microservice using Python, FastAPI, and Playwright MCP, enabling automated, browser-based UI tests driven by natural-language scenarios and producing reproducible, artifact-rich results (screenshots, DOM snapshots, logs) within the Quinn MCP Testing platform.

Responsibilities:

Persona Ingestion & Embedding: Built a CLI and a Persona-to-Vector service that batch-insert personas into a PostgreSQL table, generating semantic embeddings with OpenAI's text-embedding-3-small model and storing them via pgvector transactionally.

Persona Search & Filtering: Developed the Persona Search MCP service, which receives persona-specific queries from the orchestrator, performs semantic search combined with SQL filters, and relaxes those filters when needed so that a query still returns results.
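Relaxed filtering can be implemented as a loop that runs the search with every filter, then drops filters one at a time in a fixed priority order until enough results come back. A minimal sketch of that strategy, assuming a hypothetical drop order and an equality-matching `fake_search` over in-memory dicts in place of the real semantic search with SQL filters.

```python
from typing import Callable, Dict, List, Sequence

Filters = Dict[str, object]


def search_with_relaxation(
    search: Callable[[Filters], List[dict]],
    filters: Filters,
    relax_order: Sequence[str],
    min_results: int = 1,
) -> List[dict]:
    """Run the search with all filters, then drop filters one at a time until enough results."""
    active = dict(filters)
    results = search(active)
    for name in relax_order:
        if len(results) >= min_results:
            break
        active.pop(name, None)  # relax the least important remaining filter
        results = search(active)
    return results


# Hypothetical data and search function standing in for pgvector + SQL filters.
personas = [
    {"name": "A", "age_group": "60+", "risk": "low", "country": "IL"},
    {"name": "B", "age_group": "30-45", "risk": "low", "country": "US"},
]


def fake_search(f: Filters) -> List[dict]:
    return [p for p in personas if all(p.get(k) == v for k, v in f.items())]


found = search_with_relaxation(
    fake_search,
    {"age_group": "60+", "risk": "low", "country": "US"},
    relax_order=["country", "age_group"],  # least important filter first
)
print([p["name"] for p in found])
```

Filters not listed in `relax_order` (here `risk`) are never dropped, which keeps hard constraints intact while softer ones give way.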

Semantic Caching & Optimization: Implemented a Redis-based semantic cache to return results for similar persona descriptions, reducing processing time for LLM-driven vector search.

Orchestrator (Initial Implementation): Developed the first version of the orchestrator using an LLM MCP client to coordinate test workflows across microservices, handling server sequencing, argument mapping, and returning results; later refined by other team members.

Extended Logging Infrastructure: Enhanced FastMCP’s context class to save critical logs to configurable files with rotation, improving traceability and observability across services.
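Size-based log rotation is available in the standard library through `logging.handlers.RotatingFileHandler`. The sketch below shows only the file side, assuming that "critical logs" means WARNING and above and that the size limit and backup count are configurable; how the project hooks this into its extended FastMCP context class is not shown, and `build_critical_logger` is a hypothetical helper.

```python
import logging
from logging.handlers import RotatingFileHandler
from pathlib import Path


def build_critical_logger(
    name: str,
    log_dir: str,
    level: int = logging.WARNING,
    max_bytes: int = 1_000_000,
    backups: int = 5,
) -> logging.Logger:
    """Logger that writes records at `level` and above to a size-rotated file."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    # Avoid stacking duplicate file handlers when called more than once.
    if not any(isinstance(h, RotatingFileHandler) for h in logger.handlers):
        Path(log_dir).mkdir(parents=True, exist_ok=True)
        handler = RotatingFileHandler(
            str(Path(log_dir) / f"{name}.log"),
            maxBytes=max_bytes,     # rotate once the file reaches this size
            backupCount=backups,    # keep name.log.1 ... name.log.N
            encoding="utf-8",
        )
        handler.setLevel(level)
        handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s"))
        logger.addHandler(handler)
    return logger
```

A service would call this once at startup, for example `build_critical_logger("persona_search", "logs")`, and log through the returned logger; records below the handler's level are dropped from the file.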

...and more contributions not listed here


Responsibilities:

Designed and built the central Orchestrator, responsible for coordinating all MCP tools, managing the execution flow, and controlling the entire system logic.

Integrated LangChain Agents to enable context-aware reasoning, autonomous tool selection, and a stable, reliable conversational workflow with the LLM.

Developed a real-time WebSocket interaction channel, allowing the system to request missing information from the user and resume execution seamlessly.

Implemented massively parallel test execution using RabbitMQ, including distributed workers for the Orchestrator and each MCP, supporting hundreds of concurrent test flows.

Engineered advanced state-management and recovery mechanisms, enabling the system to pause, request additional inputs, and continue from the exact previous state without losing context.

Advanced integration with LangChain and an Agent: moving from manual history management to a system that understands context, identifies gaps, and learns from its own mistakes in real time.

Smart asynchronous scheduling with RabbitMQ: a flow design that balances load and reduces bottlenecks, while allowing the number of Workers to scale almost without limit.

Automatic data analysis and completion: a mechanism in which the system detects missing data in the question, requests it from the user, and continues from the exact point where it stopped.

Smart optimization of resources and components: a mechanism that decides when to invoke which MCP tools, based on load, availability, and priority, to ensure fast and efficient responses.

Real-time monitoring and control: a system that monitors every step of the flow, detects anomalies, and provides insights into performance and answer quality, including high-level logs that can be presented to a team or to interviewers.

...and more contributions not listed here