
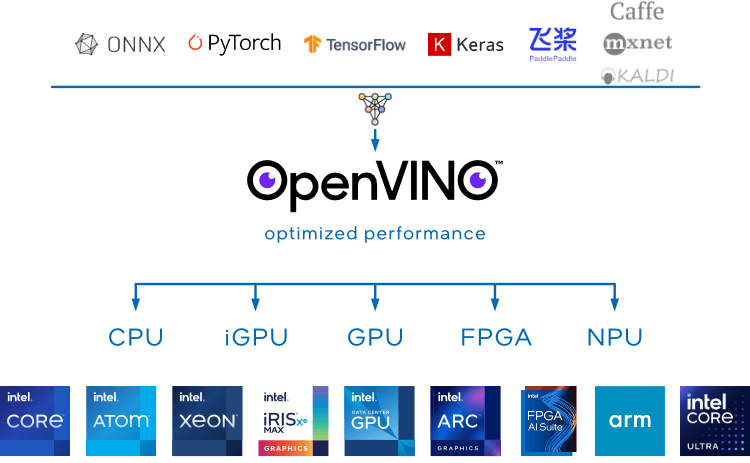

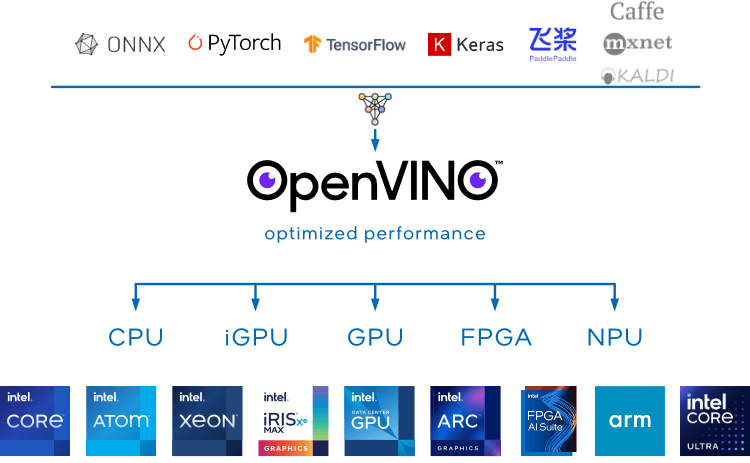

OpenVINO

Mentored by: Mobileye

Model optimization and performance contributions

C++

Python

OpenVINO Runtime

PyTorch Frontend

INT8/FP16 Quantization

Description

Contributions to OpenVINO PyTorch Frontend: added missing operators (smooth_l1_loss, fake quant ops, quantile, linalg_multi_dot), debugging system design, INT8/FP16 benchmarking (e.g., EfficientNet-V2-XL), and integration across Model Optimizer and Runtime. Includes operator validation, code-path debugging, and cross-framework consistency improvements.

Team Members

Cohort: Embedded Systems Bootcamp 2025 (Embedded)

Responsibilities:

Executed systematic experiments to evaluate model performance using Batch Size, nstreams, nireq, and CPU pinning. Compared Precision Modes (FP32, FP16, INT8, Mixed Precision) with quantization and post-training optimization. Measured and visualized key metrics such as Latency, FPS, and Memory Footprint, generating histograms and comparative graphs to analyze trade-offs between accuracy and efficiency.

https://github.com/TamiShaks-2
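As an illustration of how latency and FPS figures like these can be derived from raw timings, here is a minimal sketch. The function name `summarize_latencies` and the nearest-rank percentile are assumptions made for this example, not the team's actual tooling, and the FPS value assumes requests run one after another.

```python
import math
import statistics


def summarize_latencies(latencies_ms, batch_size=1):
    """Summarize per-request latencies (in milliseconds) into benchmark metrics."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)

    def percentile(p):
        # Nearest-rank percentile: the smallest sample such that at least
        # p percent of all samples are less than or equal to it.
        rank = max(1, math.ceil(p / 100 * len(ordered)))
        return ordered[rank - 1]

    mean_ms = statistics.fmean(ordered)
    return {
        "mean_ms": mean_ms,
        "median_ms": statistics.median(ordered),
        "p95_ms": percentile(95),
        "max_ms": ordered[-1],
        # Sequential estimate: images per second at the mean latency.
        "fps": batch_size * 1000.0 / mean_ms,
    }
```

With several parallel streams or in-flight requests, throughput should be measured over wall-clock time instead of being derived from the mean latency.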

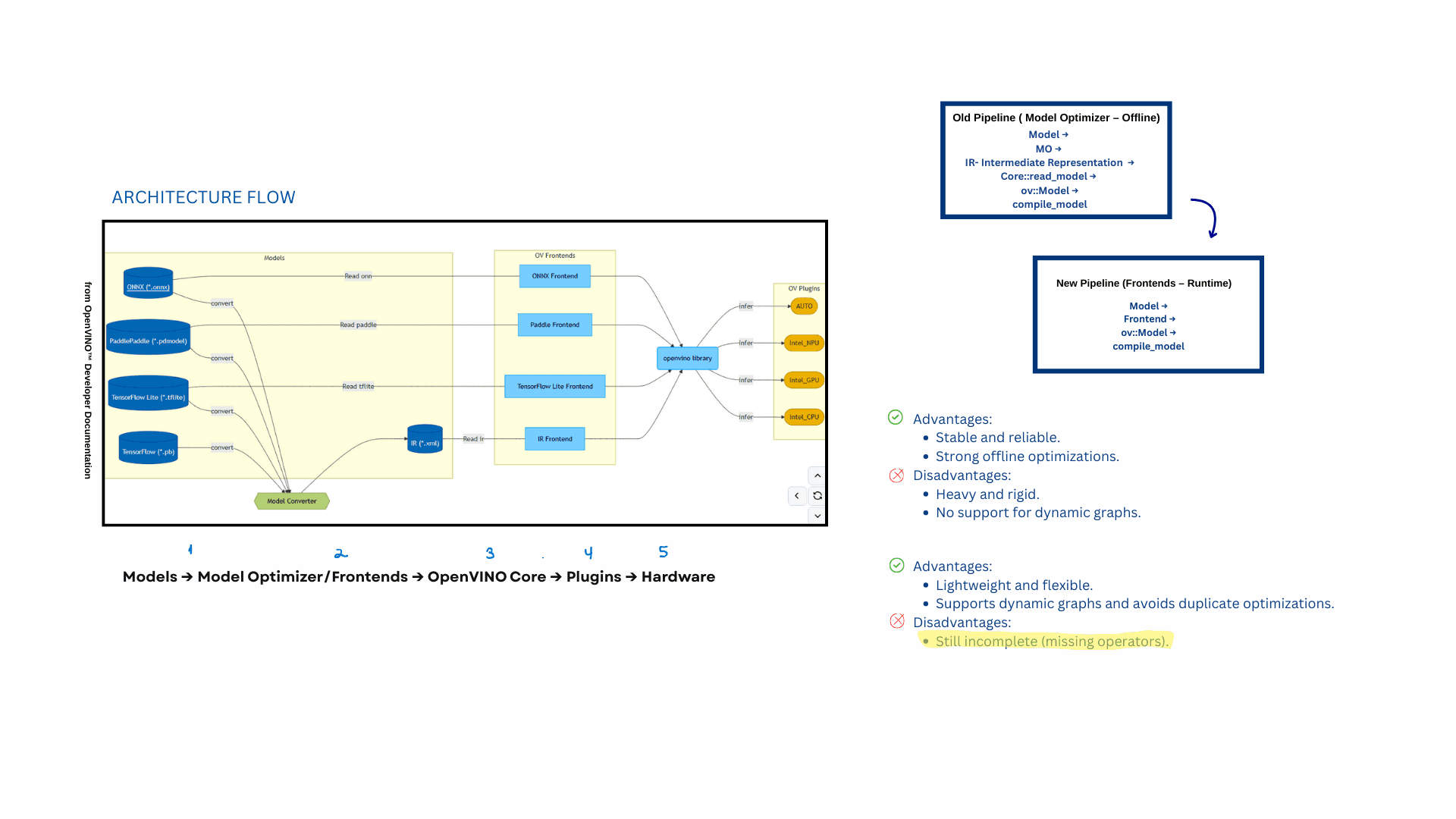

Researched Intel OpenVINO architecture (Frontend, Core, Runtime) and neural network computation flow. Gained hands-on understanding of graph representation (IR), model conversion, and optimization pipelines from PyTorch to OpenVINO.

Processed the COCO dataset, performed format conversion (COCO → YOLOv9), and created customized YAML configuration files for experiments. Executed YOLOv9-Seg and EfficientNetV2 models using OpenVINO Runtime, analyzed Latency and Throughput metrics, generated histograms and performance graphs, and optimized inference efficiency across different hardware configurations.
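The COCO to YOLO conversion mentioned above comes down to rescaling coordinates. The sketch below assumes COCO boxes are `[x_min, y_min, width, height]` in pixels and that YOLO labels use normalized centre coordinates; the helper names are hypothetical and this is not the conversion script used in the project.

```python
def coco_bbox_to_yolo(bbox, img_w, img_h):
    """Convert a COCO pixel box [x_min, y_min, w, h] to YOLO (cx, cy, w, h) in [0, 1]."""
    x_min, y_min, w, h = bbox
    return (
        (x_min + w / 2) / img_w,  # normalized box centre x
        (y_min + h / 2) / img_h,  # normalized box centre y
        w / img_w,                # normalized width
        h / img_h,                # normalized height
    )


def coco_polygon_to_yolo(polygon, img_w, img_h):
    """Normalize a flat COCO polygon [x1, y1, x2, y2, ...] for segmentation labels."""
    # Even positions are x coordinates, odd positions are y coordinates.
    return [v / (img_w if i % 2 == 0 else img_h) for i, v in enumerate(polygon)]


def yolo_label_line(class_index, values):
    """Format one label line: class index followed by normalized values."""
    return " ".join([str(class_index)] + [f"{v:.6f}" for v in values])
```

COCO category ids are not contiguous, so a real converter also needs a mapping from category id to a zero-based class index; that mapping is left out here.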

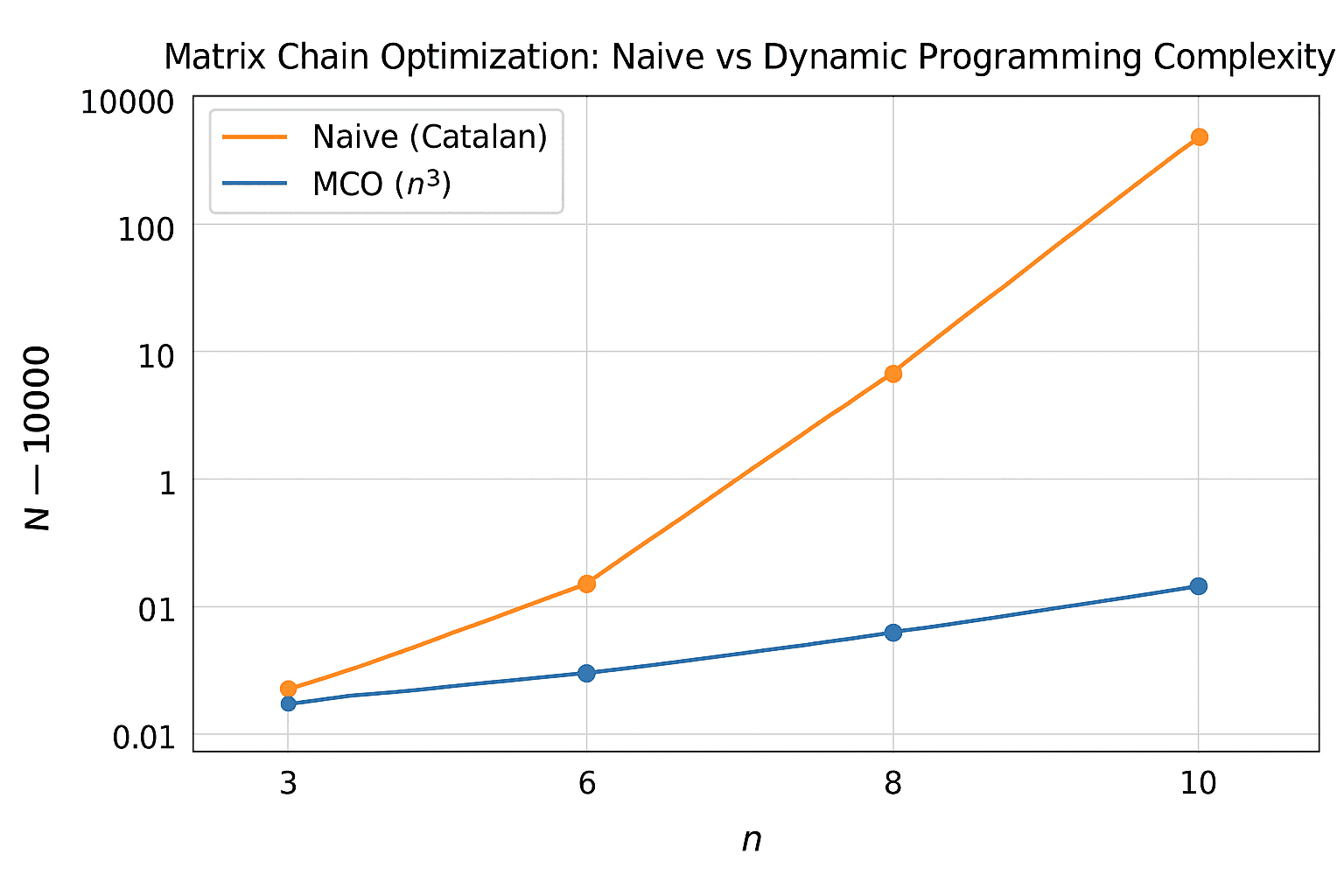

Implemented the linalg_multi_dot operator in the OpenVINO PyTorch Frontend using Matrix Chain Optimization (MCO). Achieved ~35% reduction in computation cost for multi-matrix operations. Ensured support for dynamic shapes, 1D edge cases, and consistent dtype handling.
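For readers unfamiliar with matrix chain optimization, the sketch below shows the textbook dynamic-programming formulation in Python. It is an illustration of the general technique only; the frontend implementation itself is C++ and its details (heuristics, dynamic-shape handling) are not reproduced here. The function names are chosen for this example.

```python
def matrix_chain_order(dims):
    """Find the cheapest multiplication order for a chain of matrices.

    Matrix i has shape dims[i] x dims[i + 1]. Returns (min_cost, split), where
    min_cost counts scalar multiplications and split[i][j] is the index k at
    which the product A_i..A_j is best split into (A_i..A_k)(A_k+1..A_j).
    """
    if len(dims) < 2:
        raise ValueError("dims must describe at least one matrix")
    n = len(dims) - 1
    cost = [[0] * n for _ in range(n)]
    split = [[0] * n for _ in range(n)]
    for length in range(2, n + 1):          # length of the sub-chain
        for i in range(n - length + 1):
            j = i + length - 1
            cost[i][j] = float("inf")
            for k in range(i, j):           # try every split point
                c = cost[i][k] + cost[k + 1][j] + dims[i] * dims[k + 1] * dims[j + 1]
                if c < cost[i][j]:
                    cost[i][j] = c
                    split[i][j] = k
    return cost[0][n - 1], split


def parenthesize(split, i, j):
    """Render the chosen multiplication order as a readable expression."""
    if i == j:
        return f"A{i}"
    k = split[i][j]
    return f"({parenthesize(split, i, k)} @ {parenthesize(split, k + 1, j)})"
```

For shapes 10x100, 100x5 and 5x50, multiplying left to right costs 7,500 scalar multiplications, while the other order costs 75,000, which is why the order of MatMul nodes in the converted graph matters.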

Developed and ran automated PyTest test suites to validate the correctness, stability, and performance of the linalg_multi_dot operator. Designed tests for various matrix shapes, heuristics, and dynamic dimensions to ensure numerical equivalence between PyTorch and OpenVINO outputs. Used assertions and graph-structure validation to verify optimal MatMul order and sub-product formation.

Configured multi-layer debugging using VS Code + GDB for cross-language tracing between Python and C++. Monitored operator calls through PyBind11, managed breakpoints across OpenVINO’s frontend and core layers, and created a custom launch.json for reproducible debugging sessions.

...and more contributions not listed here

Responsibilities:

Conducted applied research in quantization and performance optimization for deep-learning models using OpenVINO. Evaluated the impact of precision modes, dynamic shapes, and optimization passes on inference efficiency. Measured latency, throughput, and memory footprint across hardware configurations to understand trade-offs between accuracy and performance.

Executed YOLOv7 object-detection experiments, including preprocessing, model conversion, inference benchmarking, and systematic performance validation. Analyzed runtime behavior across CPU and GPU devices and used profiling tools to visualize bottlenecks and guide optimization choices.

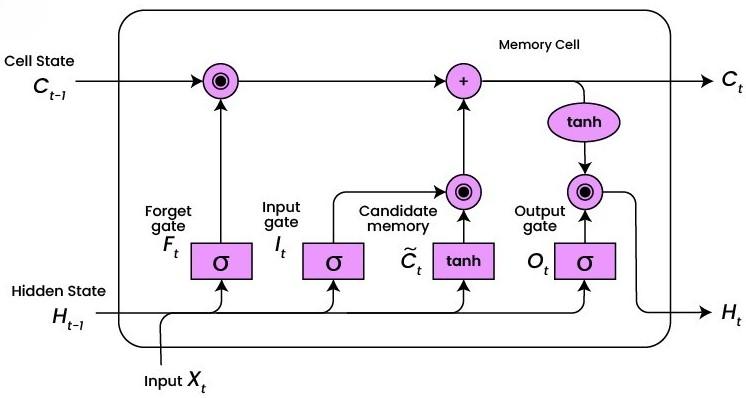

Support for aten::lstm_cell. Implemented full operator support for aten::lstm_cell within the OpenVINO PyTorch Frontend. Developed translator logic, shape-inference rules, internal validation steps, and graph-construction flows. Ensured correct handling of dynamic shapes, hidden-state initialization, and multi-directional scenarios. Verified compatibility with existing OpenVINO operations and integration with the model-conversion pipeline.
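To make the computation behind an LSTM cell concrete, here is a small pure-Python reference, assuming the PyTorch weight layout in which the four gates are stacked in the order input, forget, cell, output. It is a sketch for checking values by hand, not the translator code, and the helper names are invented for this example.

```python
import math


def _sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def _matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def lstm_cell(x, h, c, w_ih, w_hh, b_ih, b_hh):
    """One LSTM cell step for a single sample.

    x: input vector, h/c: previous hidden and cell state (length H).
    w_ih: 4H x len(x), w_hh: 4H x H, b_ih/b_hh: length 4H.
    Gate rows are assumed to be stacked as [input, forget, cell, output].
    """
    hidden = len(h)
    gates = [
        a + b + p + q
        for a, b, p, q in zip(_matvec(w_ih, x), _matvec(w_hh, h), b_ih, b_hh)
    ]
    i = [_sigmoid(v) for v in gates[0:hidden]]
    f = [_sigmoid(v) for v in gates[hidden:2 * hidden]]
    g = [math.tanh(v) for v in gates[2 * hidden:3 * hidden]]
    o = [_sigmoid(v) for v in gates[3 * hidden:4 * hidden]]
    # New cell state mixes the old state (forget gate) with the candidate (input gate).
    c_next = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
    h_next = [ov * math.tanh(cn) for ov, cn in zip(o, c_next)]
    return h_next, c_next
```

A reference like this is handy when comparing a converted graph against expected values on tiny inputs, where every gate can be verified manually.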

Support for prim::tolist. Added support for the prim::tolist operator, enabling correct lowering of list-conversion operations encountered in TorchScript/FX graphs. Built translator logic to manage tensor-to-list transformations, validated type behavior, and ensured consistent handling across dynamic inputs and nested list structures.

Debugged complex TorchScript and FX graphs, tracing conversion failures through multiple layers of the model-loading pipeline. Identified unsupported patterns, resolved mismatches between PyTorch and OpenVINO operator behavior, and refined graph-building logic to ensure successful end-to-end model conversion.

Developed unit tests and layer-test suites to validate operator correctness on CPU and GPU. Designed test coverage for diverse tensor shapes, data types, and dynamic-dimension scenarios. Verified numerical equivalence between PyTorch execution and OpenVINO inference, ensuring robustness across the operator infrastructure.
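Numerical equivalence between two frameworks is usually checked with a combined relative and absolute tolerance. The sketch below shows that comparison in plain Python; the function name `outputs_match` and the default tolerances are assumptions for illustration and do not reflect the thresholds used in the OpenVINO layer tests.

```python
import math


def _flatten(values):
    """Yield the scalars of an arbitrarily nested list or tuple as floats."""
    for v in values:
        if isinstance(v, (list, tuple)):
            yield from _flatten(v)
        else:
            yield float(v)


def outputs_match(reference, candidate, rtol=1e-4, atol=1e-5):
    """Return True if candidate matches reference element-wise within tolerance."""
    ref = list(_flatten(reference))
    out = list(_flatten(candidate))
    if len(ref) != len(out):
        return False
    for r, o in zip(ref, out):
        if math.isnan(r) or math.isnan(o):
            # NaN only matches NaN.
            if not (math.isnan(r) and math.isnan(o)):
                return False
            continue
        if r == o:
            continue  # also covers equal infinities
        if math.isinf(r) or math.isinf(o):
            return False
        if abs(o - r) > atol + rtol * abs(r):
            return False
    return True
```

Lower-precision runs (FP16, INT8) generally need looser tolerances than FP32 comparisons.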

Solved algorithmic challenges in C, C++, and Python, applying data-structure fundamentals such as arrays, linked lists, hash maps, dynamic programming, recursion, and bitmasking. Performed complexity analysis, debugging, and unit-test development to ensure correctness and performance of implemented solutions.

...and more contributions not listed here

Responsibilities:

Conducted research on quantization techniques for advanced vision models (ResNet-50, EfficientNet-XL, YOLOv8), including FP32→INT8/FP16 conversions and developing mixed-precision methods to enhance computational efficiency.
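As background for the FP32 to INT8 conversion, the following sketch shows plain affine (scale and zero-point) quantization of a list of floats. It is a textbook illustration under the assumption of a signed 8-bit range; it is not the calibration algorithm of any particular tool, and the function names are made up for this example.

```python
def quant_params(x_min, x_max, qmin=-128, qmax=127):
    """Compute scale and zero point mapping [x_min, x_max] onto [qmin, qmax]."""
    # Make sure 0.0 is representable, as is common for activations and padding.
    x_min = min(x_min, 0.0)
    x_max = max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # degenerate range: every value is zero
    zero_point = round(qmin - x_min / scale)
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integers, clamping anything outside the calibrated range."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """Map quantized integers back to approximate floats."""
    return [(q - zero_point) * scale for q in q_values]
```

The round-trip error for in-range values is bounded by about half a quantization step, which is the source of the accuracy versus efficiency trade-off discussed in this section.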

Developed a benchmarking infrastructure for CPU/GPU execution, measuring latency and throughput and tuning batch size, nstreams, and nireq to optimize real-time inference performance.
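A parameter sweep over batch size, nstreams, and nireq can be organized as a grid of `benchmark_app` invocations. The sketch below only builds the command lines using the tool's `-m`, `-d`, `-b`, `-nstreams`, and `-nireq` options; the function name, the default grids, and the rule that skips configurations with fewer requests than streams are assumptions for this example, not the team's infrastructure.

```python
import itertools


def benchmark_commands(model_path, device="CPU",
                       batch_sizes=(1, 8), nstreams=(1, 4), nireqs=(1, 4)):
    """Build one benchmark_app command line per parameter combination."""
    commands = []
    for batch, streams, requests in itertools.product(batch_sizes, nstreams, nireqs):
        if requests < streams:
            # Assumption: with fewer in-flight requests than streams,
            # some streams would sit idle, so the point is skipped.
            continue
        commands.append([
            "benchmark_app",
            "-m", model_path,
            "-d", device,
            "-b", str(batch),
            "-nstreams", str(streams),
            "-nireq", str(requests),
        ])
    return commands
```

Each command list can be passed to `subprocess.run` on a machine where OpenVINO is installed, with the reported latency and throughput collected per configuration.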

Gained deep understanding of OpenVINO’s architecture—Model Optimizer, IE Core, Frontends, Runtime, and the Intermediate Representation (IR)—and its role within the model pipeline.

Implemented new PyTorch Frontend operators such as fakequantizelearnable and SmoothL1Loss, performed numerical validation and unit testing, and submitted an official PR.
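For reference, the Smooth L1 loss as documented by PyTorch is quadratic for small errors and linear for large ones, with the switch controlled by `beta`. The pure-Python version below is a sketch for numerical validation on small inputs; it is not the frontend translator, which expresses the same formula with OpenVINO operations.

```python
def smooth_l1_loss(pred, target, beta=1.0, reduction="mean"):
    """Smooth L1 loss over two equal-length sequences of floats."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    losses = []
    for p, t in zip(pred, target):
        diff = abs(p - t)
        if diff < beta:
            # Quadratic region near zero (never taken when beta == 0).
            losses.append(0.5 * diff * diff / beta)
        else:
            # Linear region; with beta == 0 this reduces to plain L1 loss.
            losses.append(diff - 0.5 * beta)
    if reduction == "none":
        return losses
    if reduction == "sum":
        return sum(losses)
    if reduction == "mean":
        return sum(losses) / len(losses)
    raise ValueError(f"unknown reduction: {reduction}")
```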

Performed operator lowering and translated custom operators into ov::Model, ensuring precise shape inference and stable integration across the PyTorch→OpenVINO execution flow.

Authored an official Debug Guide documenting Source Build setup, configuration workflows, and VS Code integration, establishing standardized debugging practices for the development team.

Executed cross-language debugging between Python and C++ using pybind11, analyzing runtime issues, signal handling, and attach/launch debugging scenarios to improve system stability.

...and more contributions not listed here

Responsibilities:

Quantization & performance analysis of AI inference models across CPU/GPU

Benchmarking latency, throughput, memory usage, and system-level behavior

Researching accuracy vs performance trade-offs in quantized models

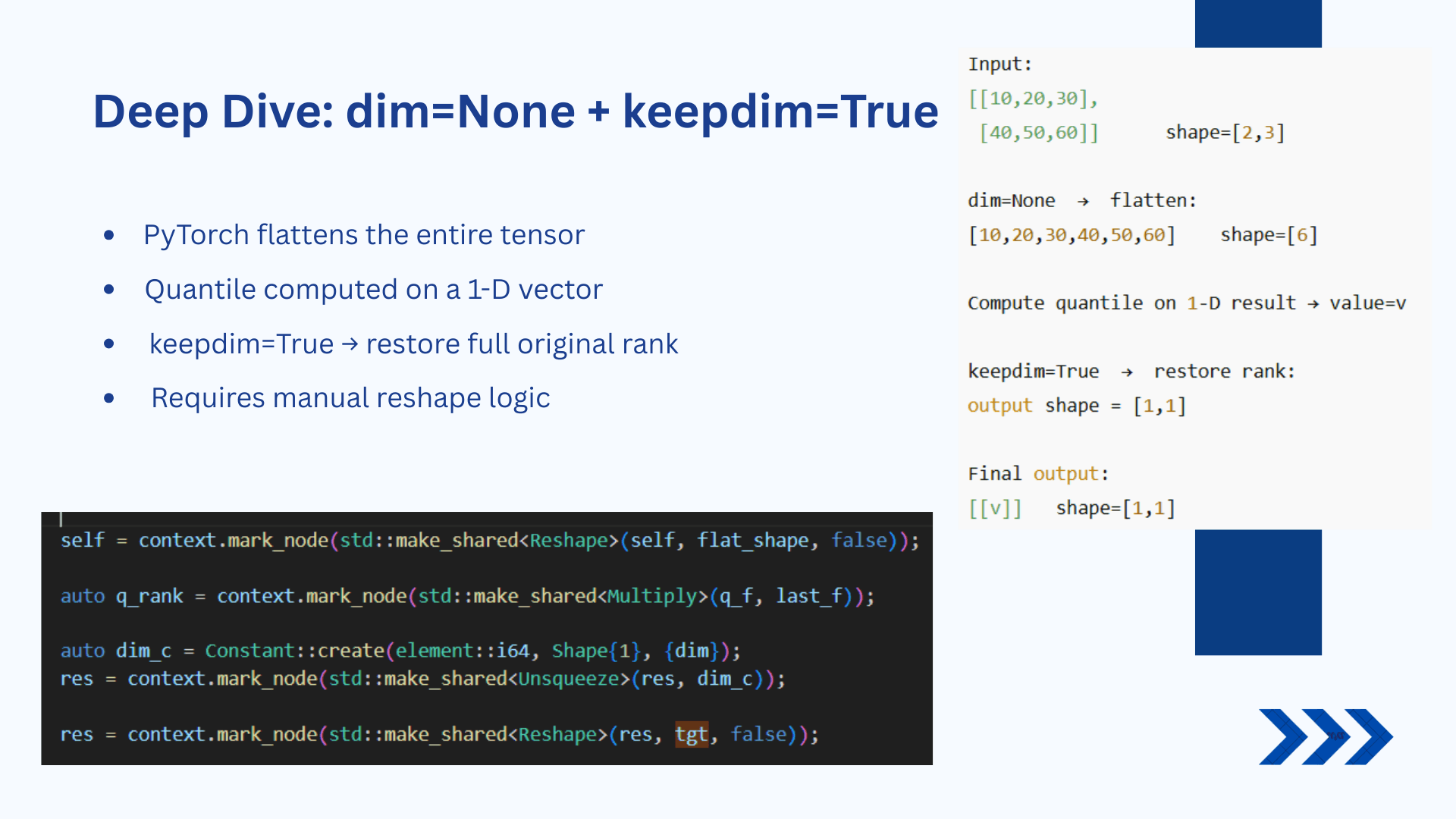

Implemented the full aten::quantile operator in the OpenVINO PyTorch Frontend — including operator translation, shape-inference logic, broadcasting-safe reshaping, all interpolation modes, support for dim=None and keepdim, NaN-propagation handling, performance optimizations (TopK/Gather), and full numerical validation against PyTorch.
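The interpolation modes can be summarized with a one-dimensional reference in plain Python. This sketch follows the behaviour documented for `torch.quantile` (position `q * (n - 1)` in the sorted data, NaN in the input yields NaN), but it is an illustration only and does not mirror the TopK/Gather-based graph built by the frontend.

```python
import math


def quantile(values, q, interpolation="linear"):
    """Quantile of a 1-D sequence, for q in [0, 1]."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must be in [0, 1]")
    if any(math.isnan(v) for v in values):
        return math.nan  # NaN propagates to the result
    ordered = sorted(values)
    pos = q * (len(ordered) - 1)       # fractional index into the sorted data
    lo, hi = math.floor(pos), math.ceil(pos)
    a, b = ordered[lo], ordered[hi]
    if interpolation == "linear":
        return a + (b - a) * (pos - lo)
    if interpolation == "lower":
        return a
    if interpolation == "higher":
        return b
    if interpolation == "midpoint":
        return (a + b) / 2
    if interpolation == "nearest":
        # round() resolves ties to the even index.
        return ordered[round(pos)]
    raise ValueError(f"unknown interpolation: {interpolation}")
```

Applying this along a chosen dimension, or over the flattened tensor when `dim=None`, gives the tensor-level behaviour; `keepdim` only affects the output shape.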