← Back to Projects

llama.cpp

Mentored by: Mobileye

Extending CPU/GPU LLM inference kernels

C++

CUDA

SYCL

GGML/GGUF

LLM inference optimization

Description

Enhancements to llama.cpp including adding missing operator kernels to SYCL/CUDA backends (ROLL, SSM_CONV, SET, CONV3D), profiling improvements, GGUF conversion changes, and enabling multimodal Eagle2-VL support. Work focuses on optimized token processing and backend-level operator coverage to improve inference throughput.

Team Members

Cohort: Embedded Systems Bootcamp 2025 (Embedded)

Responsibilities:

Operator Implementation: Implemented missing SYCL operators, such as repeat_back, ABS, and ELU, with full alignment to ggml’s data structures and correct execution on both GPU and CPU.

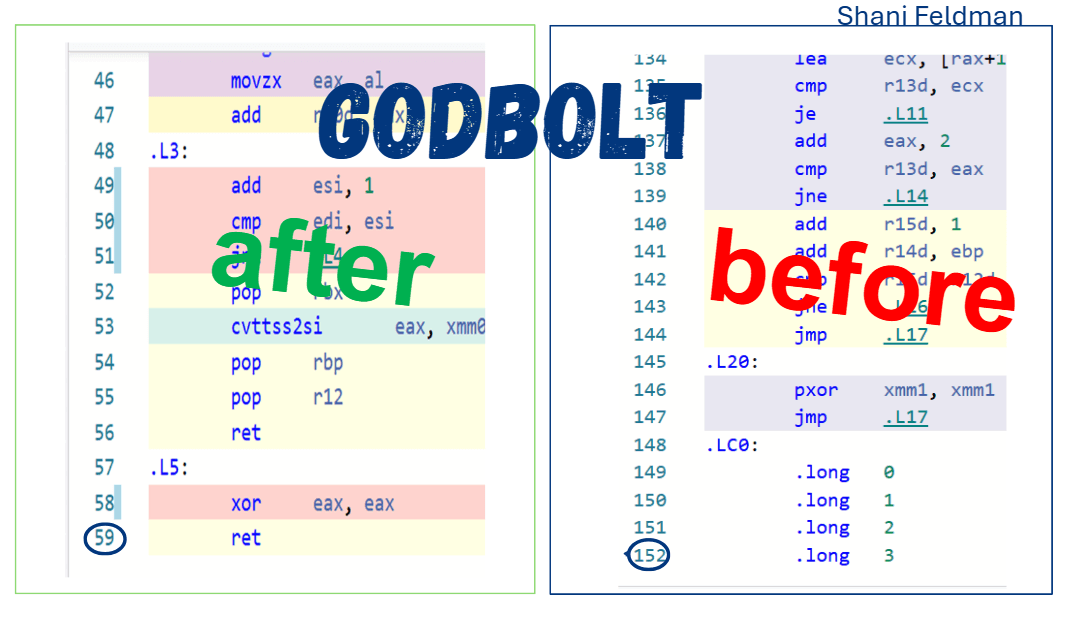

GPU Optimization: Performed advanced GPU optimizations for the operators, resulting in a significant reduction in the number of assembly instructions and achieving a 2.57× performance improvement.

Generic Kernel Development: Developed a single generic SYCL kernel supporting a large set of operators, reducing code duplication, improving maintainability, and enabling faster backend expansion.

Upstream Integration: All implementations successfully passed code review and were fully merged into the main llama.cpp repository.

Responsibilities:

Contributed GPU implementations for the missing ROLL and SSM_CONV operators in C++/SYCL, including optimized parallel kernels and a custom 4D→3D indexing scheme adapted to GPU hardware limits, achieving ~200–300% performance improvement compared to the previous CPU fallback.

Developed full integration for the Megrez-MoE model: analyzed the HuggingFace structure, mapped all tensors to llama.cpp’s GGUF architecture, and implemented model components according to existing design patterns.

Extended the HuggingFace → GGUF conversion pipeline by adding missing tensor mappings, metadata fields, and architectural parameters, enabling correct conversion, loading, and execution on both CPU and GPU.

Worked extensively with Git, maintained multiple Pull Requests, and contributed code to a large- scale open-source project used worldwide.