← Back to Projects

AgDetection

Mentored by: Applied Materials

A unified evaluation platform for ML models across multiple benchmarks

Python

PyTorch

ONNXRuntime

SQLite

MinIO

gRPC

Docker

PyQt6

Description

A system that standardizes model evaluation for classification, detection, and segmentation tasks. Includes ONNX-first architecture, dataset adapters, incremental evaluation engine, batch-size optimization, distributed caching, GUI comparison tools, and multi-framework support.

Team Members

Cohort: Data Science Bootcamp 2025 (Data)

T

No preview image

Responsibilities:

Architected the overall evaluation flow, separating runners, dataset adapters, metric computation, and persistence into clear layers to keep the system scalable and easy to extend with new models and benchmarks.

Set up a Docker-based environment that packages backend services, the database, and supporting tools into a reproducible, one-command setup for local development and demos.

Implemented the runner layer that orchestrates the full evaluation flow – loading models, running inference, aggregating results, and computing detection/classification/segmentation metrics across benchmarks.

Managed and authored PostgreSQL schema design, data ingestion, and Alembic migrations, using MinIO for persistent storage.

Implemented a robust model-to-benchmark class-ID mapping layer to align dataset labels with canonical classes and ensure consistent evaluation across datasets and experiments.

Fine-tuned and compared YOLO-based detection models on project datasets using transfer-learning techniques (freezing the backbone, training detection heads, and then gradually unfreezing layers), and evaluated their performance and trade-offs with PyQt-based experiment visualizations.

Research: Conducted research and delivered a presentation on large-language-model evaluation metrics, comparing different approaches and their implications for real-world use.

...and more contributions not listed here

Responsibilities:

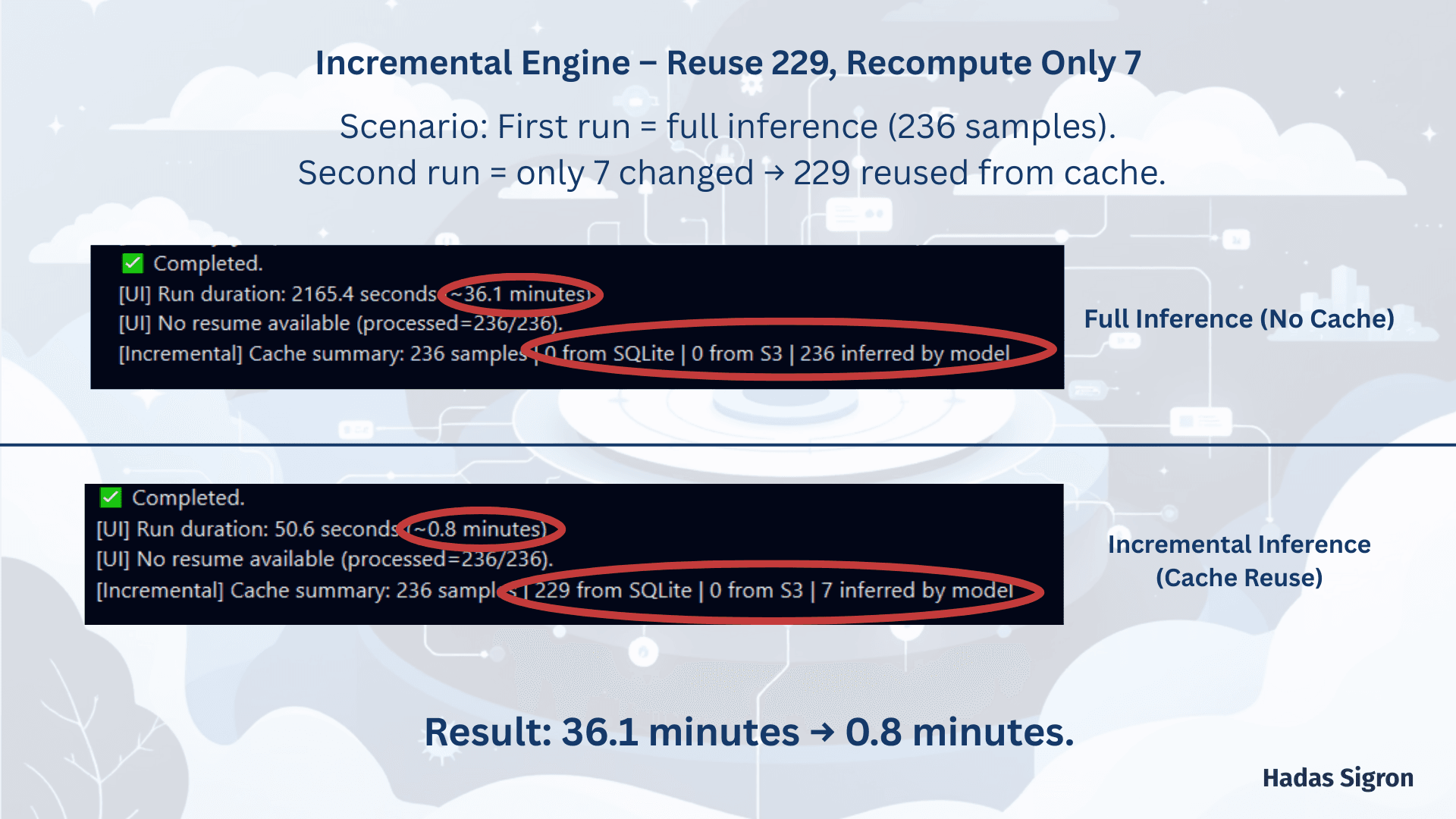

Incremental Evaluation Engine-Developed an incremental evaluation engine that accurately detects changes between runs using hashing of samples, model, and configuration, and skips redundant inference. The engine uses a dual-layer caching mechanism — Local (SQLite) for fast lookups during execution, and Distributed (MinIO/S3) for persistence and sharing of results across runs and environments — reducing repeated run times by up to ~80%, with seamless integration across all Runners and task types.

Runners & Dataset Adapters Architecture - Development of a modular evaluation architecture built on a dedicated Runner layer for each task (Classification / Detection / Segmentation), all sharing a unified run() interface while implementing task-specific execution logic. In parallel, a Dataset Adapter layer was designed to normalize multiple data formats (COCO / YOLO / VOC / image folders) into a standard structure, enabling any model to be paired with any dataset without code changes. This approach created a flexible, extensible system that fully supports “Plug and Play” integration of new models and datasets.

Smart Resume-Implementation of an intelligent Resume mechanism that tracks run progress at batch-level resolution (DONE/PENDING) in the database, enabling execution to continue exactly from the batch where it stopped in case of a crash or intentional interruption — without restarting the process. This approach improves stability and saves significant time when working with large datasets and heavy models.

Research : Development of an automatic Batch Size Optimizer for Vision model inference, incorporating latency/throughput/memory profiling, warmup runs, stability testing, and a combined exponential + binary search for fast and accurate batch-size tuning. The component was designed as a performance layer within the evaluation pipeline, providing clear insights into optimal resource utilization and enabling smarter, more efficient model execution.

Responsibilities:

Developed enhanced GrabCut segmentation algorithm with automatic ROI detection and intelligent post-processing.

Created extensible plugin system enabling Python-based algorithms to run transparently alongside AI models with identical metrics.

Built full-stack architecture with FastAPI backend supporting 500+ concurrent clients, PyQt6 desktop application with async workers.

Implemented distributed task queue system using Celery and Redis with 9 specialized workers, NGINX load balancing, and advanced health monitoring.

Deployed complete production stack with Docker Compose.

...and more contributions not listed here

Responsibilities:

Developed a comprehensive evaluation and benchmarking framework for computer-vision models, enabling reproducible experiments and consistent metric tracking across classification, detection, and segmentation tasks.

Implemented a unified evaluation pipeline with modular dataset adapters, a typed AppConfig configuration system, and a standardized Runner architecture that ensures deterministic, task-aligned execution.

Designed an ONNX-first workflow featuring automatic format detection, model conversion, and inference-parity validation, enabling consistent and optimized evaluation regardless of input model format.

Extended the system with a flexible plugin mechanism supporting both deep-learning models and classical algorithms.

Architected backend components using PostgreSQL, MinIO (S3), Redis, Celery, and Docker, supporting multi-client workload distribution, artifact persistence, and scalable execution.

Engineered an incremental evaluation engine incorporating per-sample hashing, smart caching, and partial-resume capabilities, significantly reducing repeated runtime and improving overall throughput.

Integrated the evaluation pipeline into a full PyQt desktop application, connecting model upload, benchmark selection, configuration overrides, batch-based progress reporting, live logs, and dynamic metric comparison tables.

Research: Conducted an in-depth study of Transformer architectures, including attention mechanisms and token-processing workflows. Leveraged this research to implement an LLM-based Intent Router that maps free-text instructions into task, domain, and benchmark selections, enabling automated configuration from natural-language input.

Delivered an extensible, scalable, and production-oriented evaluation platform with consistent metrics, artifact exporting, and cross-benchmark comparison capabilities.

...and more contributions not listed here