Hadas S.

Bio

Software engineer with expertise in Computer Vision, ML, Full-Stack, and DevOps. Experienced in developing end-to-end systems. Fast learner with a creative, motivated mindset.

Skills

Python

PostgreSQL

MinIO

Docker

CI/CD

FastAPI

ONNX

CUDA

TensorFlow

PyTorch

SQLite

Bootcamp Project

A unified evaluation platform for ML models across multiple benchmarks

Mentored by: Applied Materials

Data Science Bootcamp 2025 (Data)

Responsibilities:

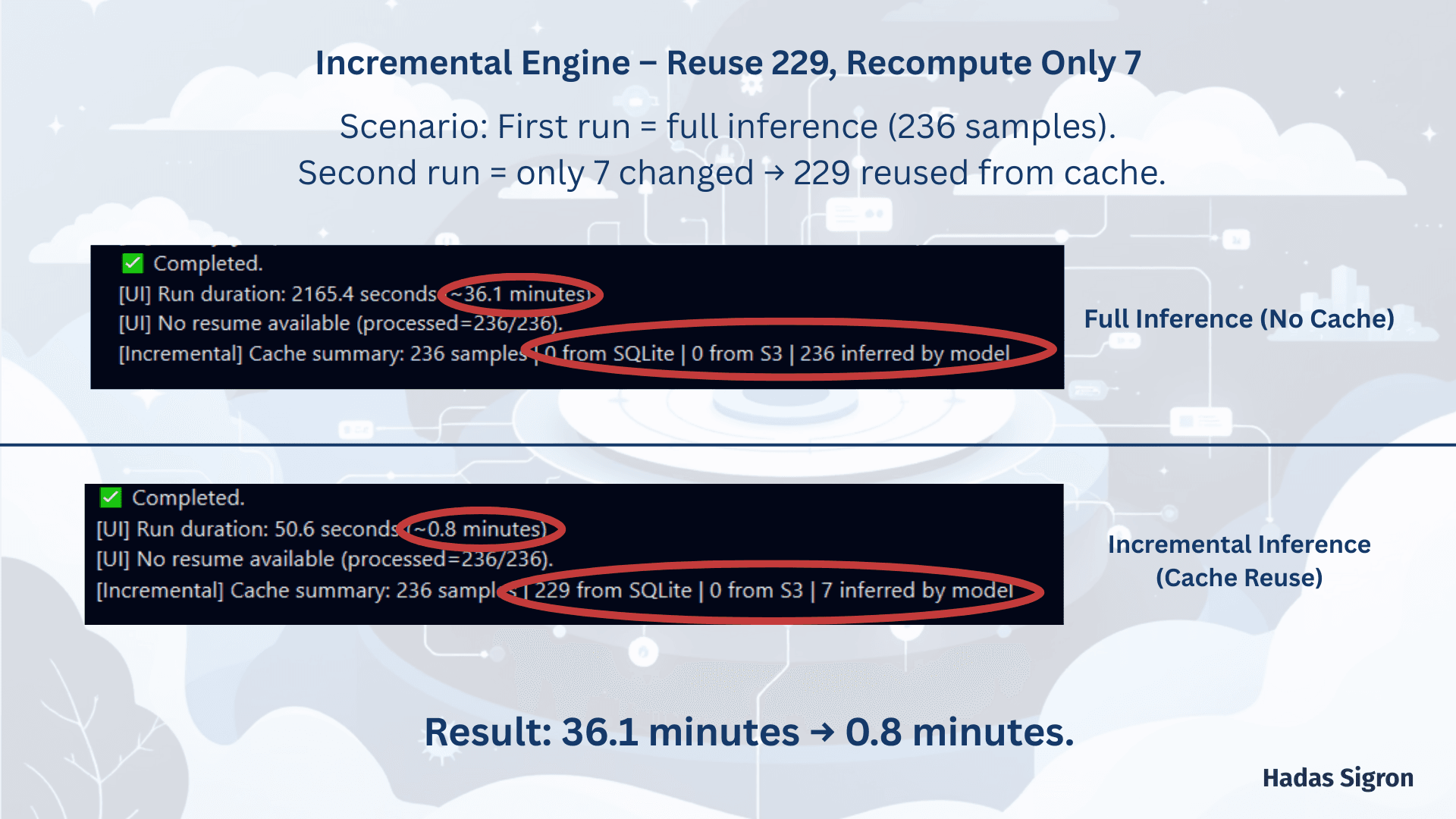

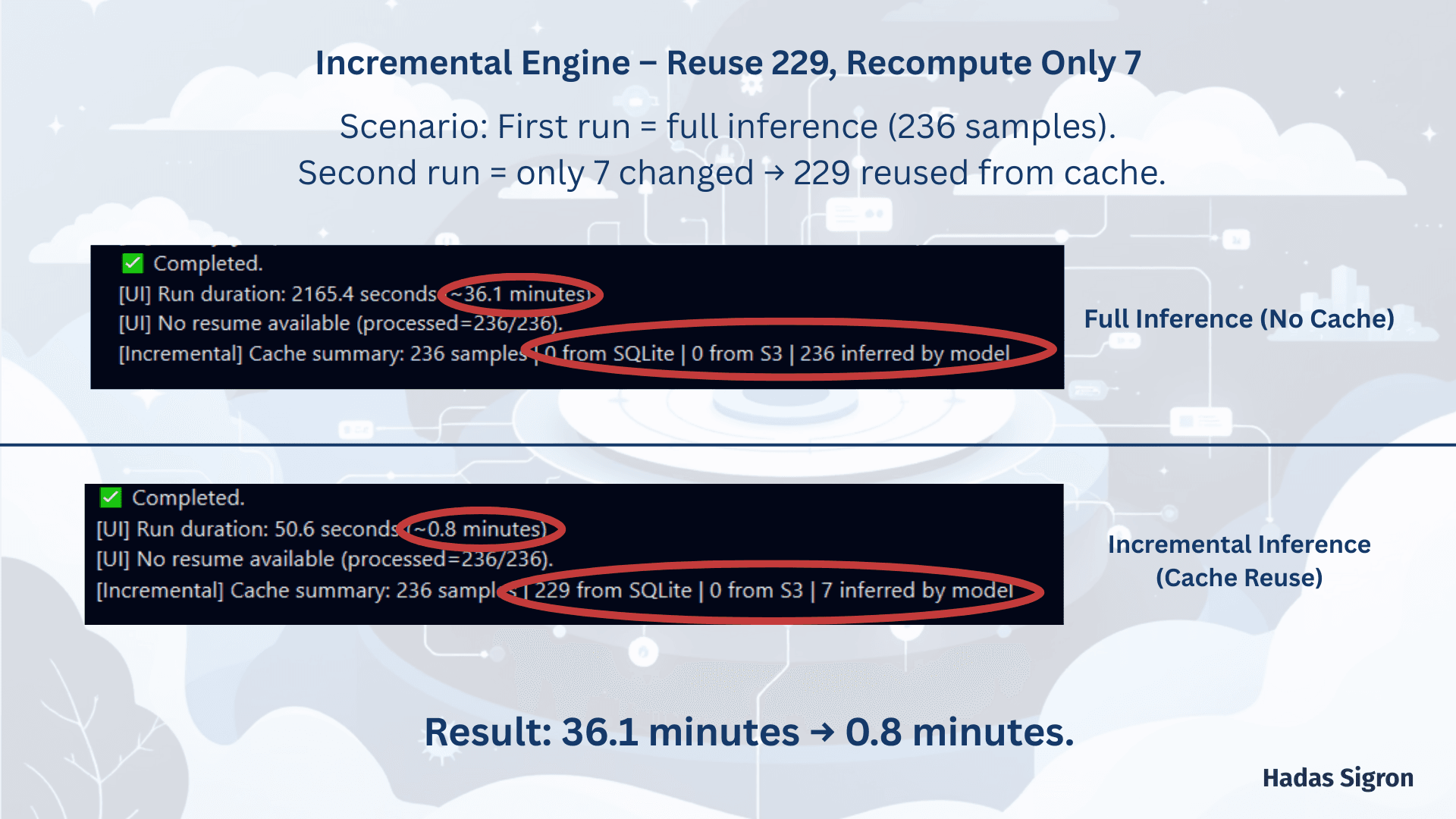

Incremental Evaluation Engine: Developed an incremental evaluation engine that detects changes between runs by hashing samples, model, and configuration, and skips redundant inference. The engine uses a dual-layer cache: a local layer (SQLite) for fast lookups during execution, and a distributed layer (MinIO/S3) for persisting and sharing results across runs and environments. This reduced repeated run times by up to ~80% and integrates with all Runners and task types.
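The hash-and-skip idea can be illustrated with a minimal sketch. It is not the project's code: `cache_key`, `LocalCache`, and `evaluate` are hypothetical names, only the local SQLite layer is shown, and predictions are assumed to be JSON-serializable and never `None`.

```python
import hashlib
import json
import sqlite3


def cache_key(sample: bytes, model_id: str, config: dict) -> str:
    """Combine sample content, model identifier, and config into one hash."""
    payload = json.dumps(
        {
            "sample": hashlib.sha256(sample).hexdigest(),
            "model": model_id,
            "config": config,
        },
        sort_keys=True,  # stable key order so equal configs hash equally
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class LocalCache:
    """Local layer only (assumption); a distributed layer is omitted here."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS results (key TEXT PRIMARY KEY, value TEXT)"
        )

    def get(self, key: str):
        row = self.db.execute(
            "SELECT value FROM results WHERE key = ?", (key,)
        ).fetchone()
        return None if row is None else json.loads(row[0])

    def put(self, key: str, value) -> None:
        self.db.execute(
            "INSERT OR REPLACE INTO results VALUES (?, ?)", (key, json.dumps(value))
        )
        self.db.commit()


def evaluate(samples, model_id, config, predict, cache):
    """Call predict only for uncached samples; return (results, inference count)."""
    results, inferred = [], 0
    for sample in samples:
        key = cache_key(sample, model_id, config)
        value = cache.get(key)
        if value is None:  # cache miss: sample, model, or config changed
            value = predict(sample)
            cache.put(key, value)
            inferred += 1
        results.append(value)
    return results, inferred
```

Because the key covers all three inputs, changing the model or any configuration value invalidates the cached entries for every sample, while adding new samples triggers inference only for those samples.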

Runners & Dataset Adapters Architecture: Developed a modular evaluation architecture built on a dedicated Runner for each task (Classification / Detection / Segmentation), all sharing a unified run() interface while implementing task-specific execution logic. In parallel, designed a Dataset Adapter layer that normalizes multiple data formats (COCO / YOLO / VOC / image folders) into a standard structure, so any model can be paired with any dataset without code changes. The result is a flexible, extensible system that supports “Plug and Play” integration of new models and datasets.
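One way such a layering might look is sketched below. The class names, the `Sample` record, and the `score` hook are all assumptions made for illustration; only the shared run() entry point and the adapter idea come from the description above.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from pathlib import PurePosixPath
from typing import Callable, Iterable


@dataclass
class Sample:
    """Hypothetical normalized record that every adapter emits."""
    image_path: str
    annotations: list = field(default_factory=list)


class DatasetAdapter(ABC):
    @abstractmethod
    def samples(self) -> Iterable[Sample]:
        """Yield samples in the normalized structure."""


class ImageFolderAdapter(DatasetAdapter):
    """Image-folder layout: the parent directory name is the class label."""

    def __init__(self, paths: list) -> None:
        self.paths = paths

    def samples(self):
        for p in self.paths:
            yield Sample(p, [{"label": PurePosixPath(p).parent.name}])


class YoloTextAdapter(DatasetAdapter):
    """YOLO-style label lines: 'class cx cy w h' with normalized coordinates."""

    def __init__(self, labels: dict) -> None:
        self.labels = labels  # image path -> list of label lines

    def samples(self):
        for path, lines in self.labels.items():
            anns = []
            for line in lines:
                cls, *box = line.split()
                anns.append({"label": int(cls), "box": [float(v) for v in box]})
            yield Sample(path, anns)


class Runner(ABC):
    """Shared run() entry point; subclasses supply task-specific scoring."""

    def __init__(self, model: Callable[[Sample], object]) -> None:
        self.model = model

    def run(self, dataset: DatasetAdapter) -> dict:
        samples = list(dataset.samples())
        predictions = [self.model(s) for s in samples]
        return self.score(samples, predictions)

    @abstractmethod
    def score(self, samples: list, predictions: list) -> dict:
        """Compute task-specific metrics."""


class ClassificationRunner(Runner):
    def score(self, samples, predictions):
        correct = sum(
            pred == s.annotations[0]["label"]
            for s, pred in zip(samples, predictions)
        )
        return {"accuracy": correct / len(samples) if samples else 0.0}
```

In this sketch a new dataset format only needs a new adapter, and a new task only needs a new Runner subclass; neither change touches the other layer.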

Smart Resume: Implemented a resume mechanism that tracks run progress at batch-level resolution (DONE/PENDING) in the database, so that after a crash or intentional interruption, execution continues from the exact batch where it stopped instead of restarting. This improves stability and saves significant time when working with large datasets and heavy models.
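A minimal sketch of batch-level resume tracking follows, assuming a SQLite table as the progress store. The table layout and the function names (`init_run`, `pending_batches`, `run_batches`) are hypothetical, not taken from the project.

```python
import sqlite3


def init_run(db: sqlite3.Connection, run_id: str, n_batches: int) -> None:
    """Register every batch as PENDING; existing rows are left untouched."""
    db.execute(
        "CREATE TABLE IF NOT EXISTS batches ("
        "run_id TEXT, batch_idx INTEGER, status TEXT, "
        "PRIMARY KEY (run_id, batch_idx))"
    )
    db.executemany(
        "INSERT OR IGNORE INTO batches VALUES (?, ?, 'PENDING')",
        [(run_id, i) for i in range(n_batches)],
    )
    db.commit()


def pending_batches(db: sqlite3.Connection, run_id: str) -> list:
    """Return the indices of batches that have not finished yet, in order."""
    rows = db.execute(
        "SELECT batch_idx FROM batches "
        "WHERE run_id = ? AND status = 'PENDING' ORDER BY batch_idx",
        (run_id,),
    ).fetchall()
    return [row[0] for row in rows]


def run_batches(db, run_id: str, n_batches: int, process_batch) -> None:
    """Process only PENDING batches, marking each DONE after it succeeds."""
    init_run(db, run_id, n_batches)
    for idx in pending_batches(db, run_id):
        process_batch(idx)  # if this raises, the batch stays PENDING
        db.execute(
            "UPDATE batches SET status = 'DONE' "
            "WHERE run_id = ? AND batch_idx = ?",
            (run_id, idx),
        )
        db.commit()
```

Calling `run_batches` again with the same `run_id` after a failure picks up at the first batch still marked PENDING, since `INSERT OR IGNORE` preserves the rows already marked DONE.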

Research: Developed an automatic Batch Size Optimizer for vision model inference, incorporating latency/throughput/memory profiling, warmup runs, stability testing, and a combined exponential + binary search for fast and accurate batch-size tuning. The component was designed as a performance layer within the evaluation pipeline, reporting optimal resource utilization and enabling more efficient model execution.
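The combined exponential + binary search can be sketched as below. This is an illustration under assumptions: `fits` is a hypothetical predicate standing in for a real probe (for example, warmup plus timed inference that completes without running out of memory), and it is assumed to be monotone, meaning that if a batch size fails then every larger one fails too.

```python
from typing import Callable


def find_max_batch_size(fits: Callable[[int], bool], limit: int = 4096) -> int:
    """Return the largest batch size in [1, limit] for which fits() is True.

    Returns 0 if even a batch size of 1 does not fit.
    """
    if not fits(1):
        return 0

    lo, hi = 1, 2  # lo is always a batch size known to fit
    # Exponential phase: double until a probe fails or the limit is passed.
    while hi <= limit and fits(hi):
        lo = hi
        hi *= 2
    if hi > limit:
        hi = limit + 1  # treat everything above the limit as not fitting

    # Binary phase: lo fits, hi does not; narrow the gap to one step.
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if fits(mid):
            lo = mid
        else:
            hi = mid
    return lo
```

The doubling phase brackets the answer in a logarithmic number of probes, and the binary phase then pins it down, which matters when each probe is an expensive inference run.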

Additional Projects

Smart Wardrobe: Developed a digital wardrobe system supporting clothing item uploads, automatic color detection using image analysis and K-Means, smart categorization, dynamic outfit generation and sharing, and user access control. The platform uses data-driven matching logic to support efficient, interactive wardrobe organization.
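As an illustration of K-Means color detection, the sketch below clusters RGB pixels and reports the center of the largest cluster. It is a dependency-free toy version with a deterministic initialization, and it assumes that "color detection" means finding the dominant color; the project may well use a library implementation and a different definition.

```python
def _assign(pixels, centroids):
    """Group pixels by their nearest centroid (squared Euclidean distance)."""
    clusters = [[] for _ in centroids]
    for p in pixels:
        nearest = min(
            range(len(centroids)),
            key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centroids[i])),
        )
        clusters[nearest].append(p)
    return clusters


def dominant_color(pixels, k=3, iterations=10):
    """Return the rounded RGB center of the largest K-Means cluster."""
    if not pixels:
        raise ValueError("pixels must not be empty")

    # Deterministic init (assumption): evenly spaced distinct colors.
    unique = sorted(set(pixels))
    k = min(k, len(unique))
    step = max(k - 1, 1)
    centroids = [unique[i * (len(unique) - 1) // step] for i in range(k)]

    for _ in range(iterations):
        clusters = _assign(pixels, centroids)
        updated = [
            tuple(sum(ch) / len(members) for ch in zip(*members)) if members else c
            for c, members in zip(centroids, clusters)
        ]
        if updated == centroids:  # converged
            break
        centroids = updated

    # Final assignment so cluster sizes match the final centroids.
    clusters = _assign(pixels, centroids)
    largest = max(range(len(centroids)), key=lambda i: len(clusters[i]))
    return tuple(round(v) for v in centroids[largest])
```

For real images the pixel list would typically be downsampled first, since this pure-Python loop is far slower than a vectorized implementation.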

DevOps Automation: Implemented a full CI/CD pipeline for the WorkProfile application, including automated testing, Docker image builds, and continuous deployment. The system is deployed on Kubernetes with persistent database storage, with GitHub Actions and Docker automating the pipeline.

English Level

Working Proficiency