
NanoVerse

Mentored by: Mobileye

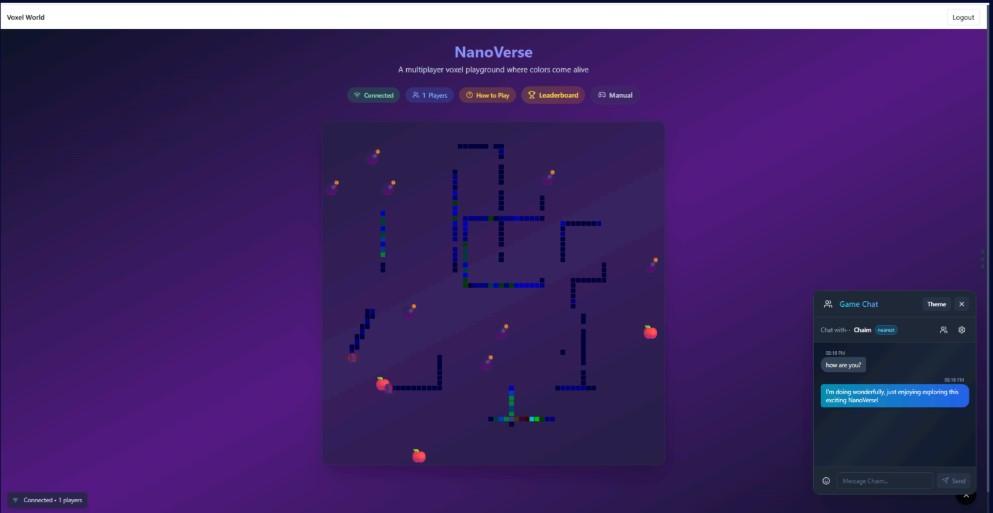

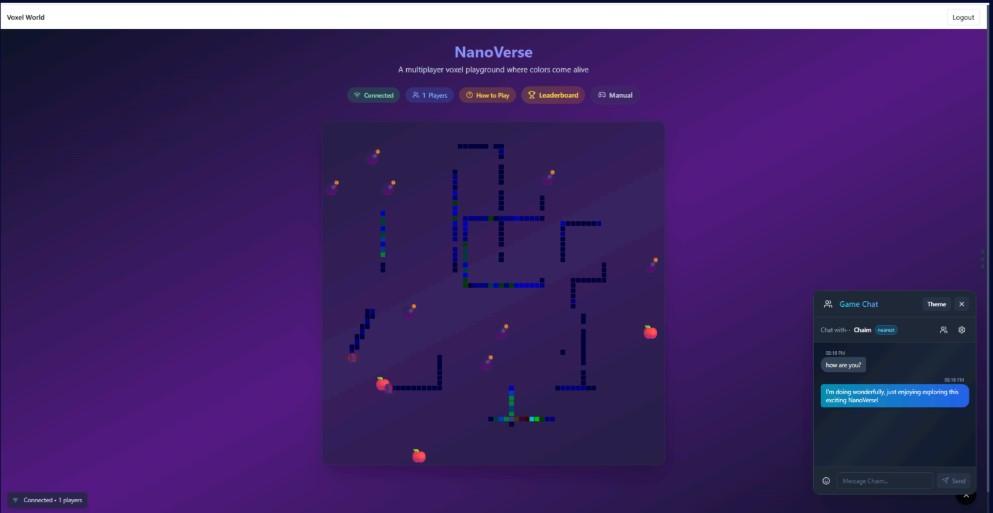

A persistent, AI-driven multiplayer voxel world

Python

Node.js/Game engine

GRU

Gemma-3

LoRA

DPO

YOLO

Quantization

WebSockets

Chunk Streaming

Description

A real-time, infinite-world game in which the world stays alive even when players disconnect. It combines GRU-based movement prediction, personalized Gemma-3 chat models fine-tuned with LoRA, DPO preference learning, chunk-based world streaming, YOLO-based perception, daily adaptation loops, and Matryoshka quantization for performance. The engine is layered: a GRU world model, personalized chat, and a real-time behavior engine.

Team Members

Cohort: Data Science Bootcamp 2025 (Data)

Responsibilities:

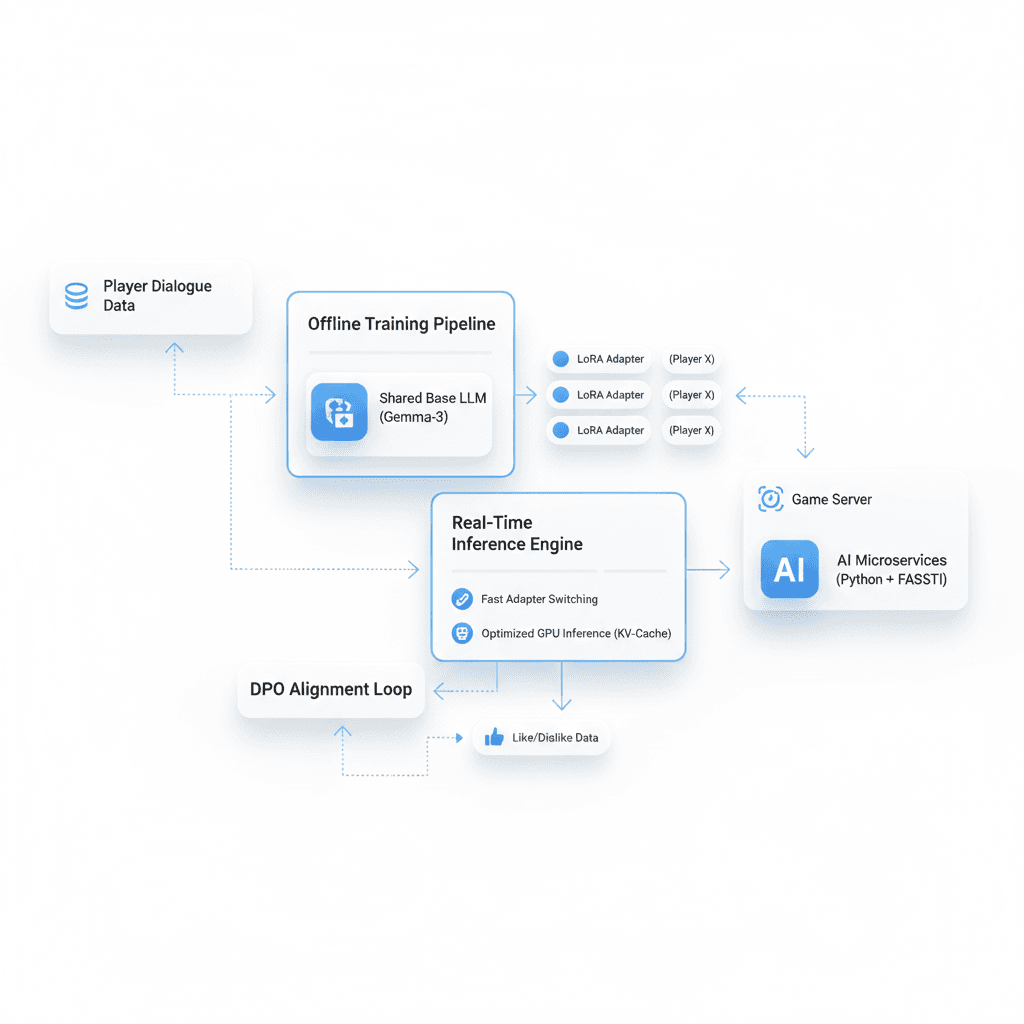

Server-side Inference Pipeline: Designed and implemented the server-side inference core in Python with asyncio, built around a stable asynchronous processing flow. Integrated WebSockets for real-time communication with the frontend and integrated the Gemma model for fast, reliable inference with efficient GPU usage.

Custom LoRA Adapters: Developed LoRA adapters that give each player a unique speech style and personality. Managed adapter loading, execution, and GPU allocation, and performed light per-player fine-tuning of the Gemma model while keeping the system stable and performant with multiple players.
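One way to keep per-player adapters from exhausting GPU memory is a small least-recently-used cache that holds only a fixed number of adapters at a time. The sketch below illustrates the idea only; `AdapterCache`, its `capacity`, and the `load` callback are hypothetical names, and the actual loading of LoRA weights is left to the caller.

```python
from collections import OrderedDict
from typing import Callable, Generic, List, TypeVar

A = TypeVar("A")


class AdapterCache(Generic[A]):
    """Keep at most `capacity` adapters resident; evict the least recently used."""

    def __init__(self, capacity: int, load: Callable[[str], A]) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._load = load  # hypothetical loader, e.g. reads adapter weights from disk
        self._resident: "OrderedDict[str, A]" = OrderedDict()

    def get(self, player_id: str) -> A:
        """Return the player's adapter, loading it on a cache miss."""
        if player_id in self._resident:
            # Mark as most recently used.
            self._resident.move_to_end(player_id)
            return self._resident[player_id]
        adapter = self._load(player_id)
        self._resident[player_id] = adapter
        if len(self._resident) > self._capacity:
            # Drop the adapter that has gone unused the longest.
            self._resident.popitem(last=False)
        return adapter

    def resident_players(self) -> List[str]:
        """Player ids currently cached, least recently used first."""
        return list(self._resident)
```

With a capacity of 2, requesting adapters for three different players evicts whichever of the first two was used least recently, so the number of resident adapters stays bounded however many players join.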

Real-time Chat Interface: Built the chat interface using React and TypeScript, fully synchronized via WebSocket. Handled message display, user interactions, animations, and connected chat to game events and server-side inference.

Full Frontend-Backend Integration: Implemented two-way communication with FastAPI and WebSocket, including real-time message processing, error handling, and immediate AI response display, ensuring seamless synchronization under high load.

GPU Server Management & DevOps: Managed the GPU server hosting the Gemma models and LoRA adapters, covering Bash user setup and permissions, Docker deployment, monitoring of RAM, VRAM, and disk usage, resource optimization, and maintenance of the team's development environment.

DPO Infrastructure Implementation: Studied Direct Preference Optimization (DPO) and presented findings. Built a DPO system for the game, collecting user feedback ("likes" on chat messages) and preparing it for future Fine-Tuning, using a React interface connected to FastAPI for seamless integration into the inference system.

Research: Study on DPO. Read the paper "Direct Preference Optimization: Your Language Model is Secretly a Reward Model", prepared a summary presentation of the findings, and presented it to the team and an audience.

Task: Building infrastructure for DPO implementation in NanoVerse. Developed a foundation for applying DPO principles in the NanoVerse game, including a mechanism that lets users like messages to provide real feedback for model improvement, and prepared the infrastructure to integrate the model with the in-game chat system.
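DPO trains on triples of a prompt, a preferred reply, and a less-preferred reply, so "like" feedback has to be turned into such pairs before fine-tuning. The sketch below shows one possible conversion. It assumes that several candidate replies to the same prompt were logged and that an unliked reply can be treated as the rejected one. Both are assumptions, as are the `ChatFeedback` record and the `prompt`/`chosen`/`rejected` field names.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class ChatFeedback:
    """One logged reply and whether the user liked it (hypothetical schema)."""
    prompt: str
    reply: str
    liked: bool


def build_preference_pairs(feedback: Iterable[ChatFeedback]) -> List[Dict[str, str]]:
    """Pair every liked reply with every unliked reply to the same prompt."""
    by_prompt: Dict[str, List[ChatFeedback]] = {}
    for item in feedback:
        by_prompt.setdefault(item.prompt, []).append(item)

    pairs: List[Dict[str, str]] = []
    for prompt, items in by_prompt.items():
        liked = [i.reply for i in items if i.liked]
        unliked = [i.reply for i in items if not i.liked]
        # Prompts with only liked (or only unliked) replies yield no pair.
        for chosen, rejected in product(liked, unliked):
            pairs.append({"prompt": prompt, "chosen": chosen, "rejected": rejected})
    return pairs
```

A prompt whose replies were all liked produces no pairs under this scheme, which is one reason the feedback may need to accumulate for a while before fine-tuning becomes worthwhile.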

...and more contributions not listed here

Responsibilities:

Model Research & Architecture Selection : Evaluated image classification and object detection models, analyzing runtime and training complexity to select the most suitable architecture.

Backend Core Engineering & Board Encoding : Developed core FastAPI services, action-token logic, GRU model integration, and efficient 8-bit tensor board encoding.

Server Logic, Data Pipelines & Memory Optimization : Refactored backend logic, improved history logging using atomic writes, and optimized memory usage for large dynamic worlds.
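An atomic write typically means writing to a temporary file in the same directory and then renaming it over the target, so a reader never sees a half-written log. The following is a minimal sketch of that pattern using only the standard library; the function name `write_history_atomic` and the JSON format are assumptions, not the project's actual code.

```python
import json
import os
import tempfile
from typing import Any, List


def write_history_atomic(path: str, records: List[Any]) -> None:
    """Replace `path` with `records` so readers see the old or the new file, never a mix."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file must live on the same filesystem for the rename to be atomic.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as tmp:
            json.dump(records, tmp)
            tmp.flush()
            os.fsync(tmp.fileno())  # push the data to disk before renaming
        os.replace(tmp_path, path)  # atomic rename over the target
    except BaseException:
        # Clean up the temp file if anything failed before the rename.
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
```

If the process crashes mid-write, the previous history file is still intact and only a stray `.tmp` file may remain.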

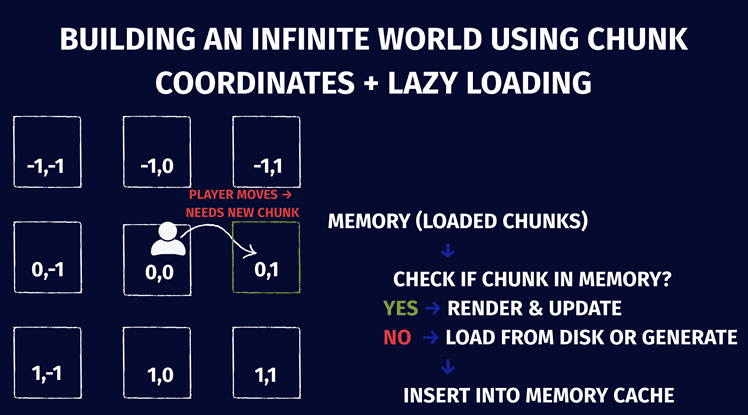

Scalable Microservices Architecture : Designed modular services (movement, sessions, world-state, messaging) and implemented chunk-generation for infinite world expansion.

Multi-Team Git Integration & Code Unification : Merged cross-team codebases, resolved structural conflicts, standardized API interfaces, and ensured consistent repository architecture.

UI Integration & Real-Time Sync : Connected TypeScript UI to backend endpoints and GRU predictions, enabling real-time updates and seamless infinite-world navigation.

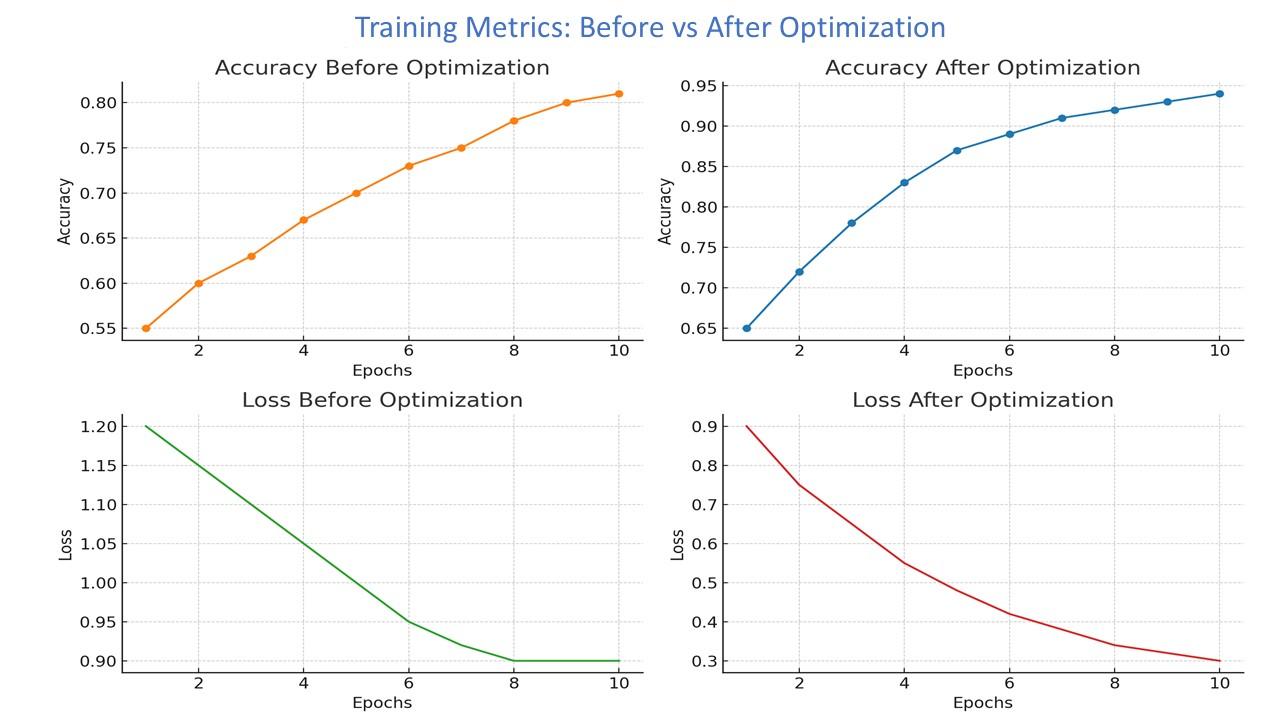

GRU Model Research & Variant Exploration : Benchmarked multiple GRU configurations and refined the model’s temporal-prediction role for gameplay sequence modeling.

GRU Training Optimization & Stabilization : Improved model accuracy through hyperparameter tuning, iterative experimentation, and convergence-stability enhancements.

Testing, Validation & Debugging Utilities : Created debugging tools and validation scenarios ensuring correct chunk loading, world transitions, and infinite-world stability.

Theory Application, Presentation & Cloud Deployment : Applied insights from The Universality Lens (Feder, Urbanke & Fogel, 2025), delivered a technical presentation, and deployed all services to production at nanoverse.me.

...and more contributions not listed here

Responsibilities:

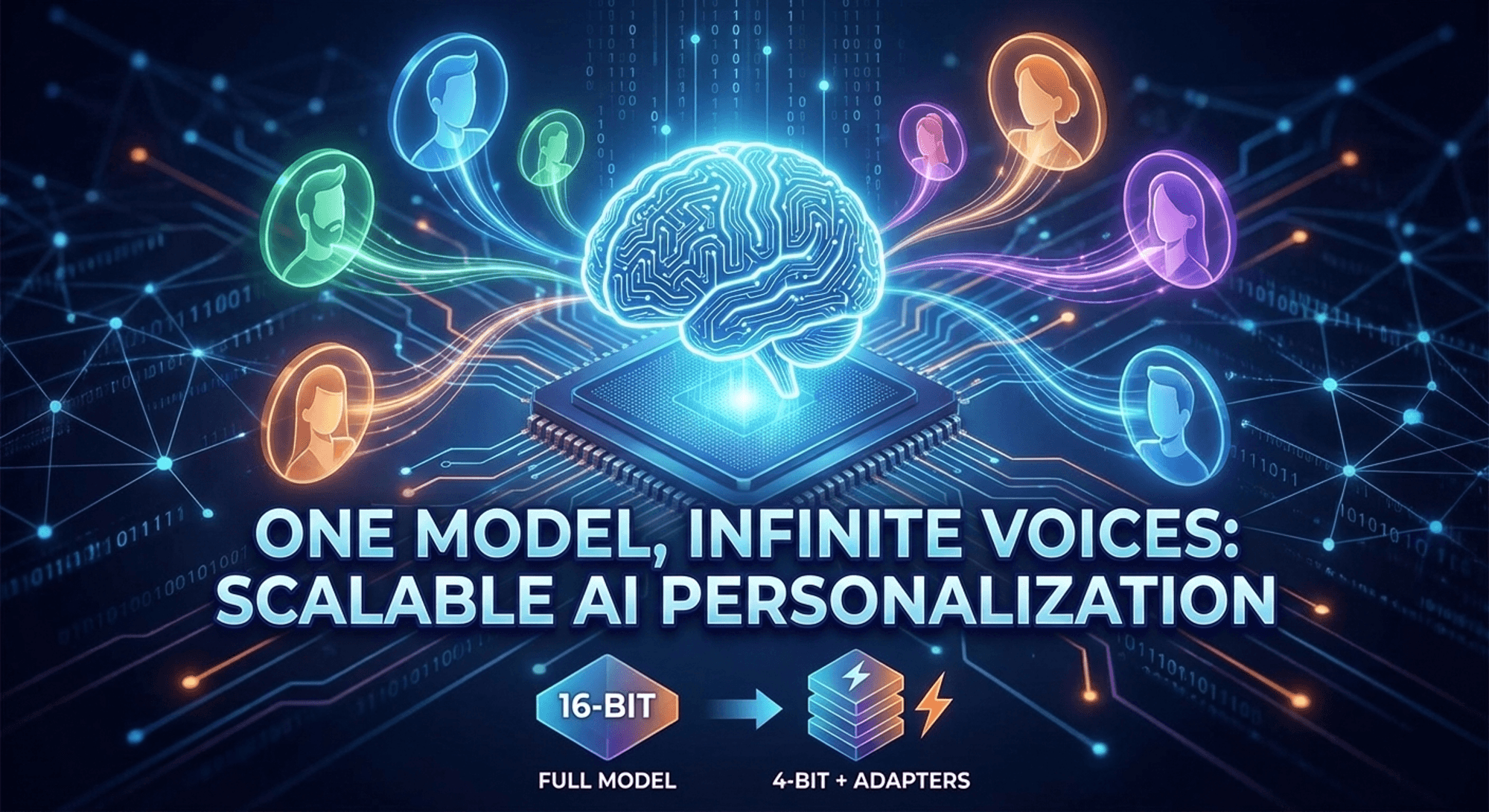

AI CORE & PERSONALIZATION STRATEGY: Spearheaded the transition from Prompt Engineering to an advanced architecture based on Fine-tuning and LoRA. Developed intelligent Agents based on the Gemma-3 (1B/4B) model to emulate unique user styles. Built an automated End-to-End Training Pipeline in Python, including dataset creation and SFT (Supervised Fine-Tuning), to continuously improve model quality.

REAL-TIME ARCHITECTURE & OPTIMIZATION: Developed a synchronous chat system with smart Fallback logic to detect timeouts and maintain flow. Wrote optimized Low-Latency Inference code in Python allowing real-time execution on a single RTX 2080 Ti. Implemented Advanced Resource Management including rapid Adapter Switching and Caching mechanisms to prevent GPU bottlenecks.
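Timeout-based fallback of this kind can be sketched with `asyncio.wait_for`: if the model does not answer within a deadline, a canned reply is returned so the chat keeps moving. The names `reply_with_fallback`, `generate`, and `FALLBACK_REPLY` below are hypothetical, and the real system's fallback behavior may differ.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical canned reply used when the model is too slow.
FALLBACK_REPLY = "Give me a moment..."


async def reply_with_fallback(
    generate: Callable[[str], Awaitable[str]],
    prompt: str,
    timeout_s: float,
    fallback: str = FALLBACK_REPLY,
) -> str:
    """Return the model's reply, or `fallback` if it misses the deadline."""
    try:
        # wait_for cancels the pending generation when the timeout expires.
        return await asyncio.wait_for(generate(prompt), timeout=timeout_s)
    except asyncio.TimeoutError:
        return fallback
```

Because `wait_for` cancels the timed-out task, a slow generation does not keep running in the background after the fallback has been sent.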

BACKEND & INFRASTRUCTURE MANAGEMENT: Built the server-side in Python using FastAPI to manage system interactions. Integrated coding agents to assist in developing UI components and logic. Managed DevOps & Server Maintenance, handling GPU processes, storage cleanup, and Environment Configuration for the shared development team.

THEORETICAL RESEARCH & KNOWLEDGE TRANSFER: Conducted an independent Deep Dive into 'Matryoshka Representation Learning', analyzing advanced Embedding architectures and efficiency implications. Synthesized research insights into a professional lecture and comprehensive presentation, delivering the content to a wide forum.

Implementation of client-side logic for managing chat state: Created client-side logic in React and TypeScript for managing chat state, synchronizing messages between players across the dynamic game map, and maintaining consistent communication driven by Gemma adapters.

Management of the GPU server: Served as DevOps for the GPU server hosting the Gemma models, including creating and maintaining Bash user accounts, monitoring disk, RAM, and GPU VRAM usage, deploying Docker containers for AI services, and efficiently allocating limited resources across all game services.

Reading and Deepening Understanding of a Research Paper on Matryoshka Quantization: As part of the research, I read a paper on Matryoshka Quantization in depth, analyzing its core methods and their impact on model performance, and evaluating limitations and potential applications. The work included extracting insights, preparing a presentation, and delivering it to an audience, demonstrating a solid understanding of quantization principles and their relevance to complex AI models.

...and more contributions not listed here

Responsibilities:

Built backend systems for an infinite world with dynamic chunk generation and efficient board allocation, and implemented on-demand chunk loading with smart caching to optimize memory usage and response times.
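On-demand chunk loading with caching can be illustrated as follows: world coordinates map to a chunk, each chunk is generated deterministically from a seed the first time it is requested, and a bounded cache keeps recently used chunks in memory. This is a sketch under assumptions; `CHUNK_SIZE`, `WORLD_SEED`, the four tile types, and the cache size are all hypothetical values.

```python
import random
from functools import lru_cache
from typing import Tuple

CHUNK_SIZE = 16    # hypothetical chunk edge length, in tiles
WORLD_SEED = 1234  # hypothetical world seed


def chunk_coords(x: int, y: int) -> Tuple[int, int]:
    """Map world coordinates to chunk coordinates (floor division handles negatives)."""
    return x // CHUNK_SIZE, y // CHUNK_SIZE


@lru_cache(maxsize=256)  # bounded cache: least recently used chunks are dropped
def load_chunk(cx: int, cy: int) -> bytes:
    """Generate a chunk deterministically, so an evicted chunk regenerates identically."""
    rng = random.Random(f"{WORLD_SEED}:{cx}:{cy}")
    return bytes(rng.randrange(4) for _ in range(CHUNK_SIZE * CHUNK_SIZE))


def tile_at(x: int, y: int) -> int:
    """Look up one tile, loading its chunk only if it is not already cached."""
    cx, cy = chunk_coords(x, y)
    chunk = load_chunk(cx, cy)
    return chunk[(y % CHUNK_SIZE) * CHUNK_SIZE + (x % CHUNK_SIZE)]
```

Seeding the generator from the chunk coordinates means the world can grow in any direction without storing untouched chunks. A real game would also need to persist player edits, which this sketch omits.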

Designed an 8-bit tensor-based board structure, replacing complex objects and significantly improving performance and GPU efficiency.
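The gain from an 8-bit board comes from storing one small integer per cell in a flat buffer instead of one object per cell. The sketch below uses a standard-library `bytearray` as a stand-in for the tensor; the `Tile` codes and the `Board` class are hypothetical and do not reflect the project's actual encoding.

```python
from enum import IntEnum


class Tile(IntEnum):
    """Hypothetical tile codes; each fits in a single byte."""
    EMPTY = 0
    WALL = 1
    PLAYER = 2


class Board:
    """A width x height grid stored row-major, one byte per cell."""

    def __init__(self, width: int, height: int) -> None:
        self.width = width
        self.height = height
        self.cells = bytearray(width * height)  # zero-filled, so every cell starts EMPTY

    def _index(self, x: int, y: int) -> int:
        if not (0 <= x < self.width and 0 <= y < self.height):
            raise IndexError(f"cell ({x}, {y}) is outside the board")
        return y * self.width + x

    def set(self, x: int, y: int, tile: Tile) -> None:
        self.cells[self._index(x, y)] = int(tile)

    def get(self, x: int, y: int) -> Tile:
        return Tile(self.cells[self._index(x, y)])

    def to_bytes(self) -> bytes:
        """Compact snapshot, suitable for sending over the wire or feeding a model."""
        return bytes(self.cells)
```

A buffer laid out this way can be wrapped as an unsigned 8-bit tensor by a numerical library without copying cell objects, which is the property the design relies on.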

Trained a GRU model that learns player behavior and predicts actions in real time with optimized GPU inference.

Unified codebases from two teams, resolved conflicts, standardized folder structures, and aligned shared APIs.

Handled deployments, GPU operations, and system performance monitoring on shared Linux servers.

Captured real user actions and applied behavior cloning to enable human-like real-time decision making.
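Behavior cloning treats logged player actions as supervised data: the model sees a short history of actions and learns to predict the next one. A minimal sketch of building such training pairs with a sliding window is shown below; the function name, the `window` parameter, and the string action labels are assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def make_training_pairs(
    actions: Sequence[str], window: int
) -> List[Tuple[Tuple[str, ...], str]]:
    """Slide a fixed-size window over the log: (last `window` actions, next action)."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        (tuple(actions[i : i + window]), actions[i + window])
        for i in range(len(actions) - window)
    ]


# Example: a four-action log with a window of two yields two training pairs.
pairs = make_training_pairs(["up", "up", "left", "down"], window=2)
```

Each pair becomes one training example for a sequence model such as a GRU, with the history as input and the next action as the target label.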

Documented system design, microservices, integration flows, and scaling considerations.

Explored YOLO architecture and implemented a practical demo using Ultralytics' pretrained model.

...and more contributions not listed here